University of San Diego AAI-511: Neural Networks and Deep Learning

Professor: Rod Albuyeh

Section: 5

Group: 2

Contributors:

- Ashley Moore

- Kevin Pooler

- Swapnil Patil

August, 2025

Project Overview

Classical music represents one of humanity's most sophisticated artistic achievements, with master composers like Bach, Beethoven, Chopin, and Mozart developing distinctive musical signatures that have influenced centuries of musical evolution. Each composer has a recognizable compositional style, ranging from Bach's intricate counterpoint and mathematical precision to Chopin's romantic expressiveness and innovative harmonies. These stylistic fingerprints, embedded in the musical works themselves, present a fascinating opportunity for artificial intelligence to learn and recognize the patterns that define musical authorship.

The Challenge

Traditional musicological analysis relies heavily on human expertise to identify compositional styles, requiring years of specialized training to distinguish between composers based on harmonic progressions, melodic patterns, rhythmic structures, and formal organization. However, with the advent of digital music representation and deep learning technologies, we can now approach this problem computationally, extracting and analyzing musical features at a scale and precision impossible for human analysis alone.

Approach

This project introduces a novel hybrid deep learning architecture that combines the sequential pattern recognition capabilities of Long Short-Term Memory (LSTM) networks with the spatial feature extraction power of Convolutional Neural Networks (CNNs) to automatically classify musical compositions by their composers. Our methodology integrates three distinct feature extraction approaches:

- Musical Features: Traditional music theory elements including pitch distributions, harmonic progressions, tempo variations, and rhythmic patterns

- Harmonic Features: Advanced harmonic analysis capturing chord progressions, key relationships, and tonal structures

- Sequential Features: Time-series representation of musical events, preserving the temporal relationships crucial to compositional style
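One concrete example of the "Musical Features" family is a pitch distribution. It can be summarized as a 12-bin pitch-class histogram. The minimal sketch below assumes the input is a list of MIDI note numbers, such as the values music21 returns from `pitch.midi`. The function name is illustrative and is not part of the project code.

```python
import numpy as np

def pitch_class_histogram(midi_pitches):
    """Normalized 12-bin pitch-class distribution (index 0 = C, 1 = C#, ..., 11 = B).

    midi_pitches: iterable of MIDI note numbers (0-127).
    """
    pcs = np.asarray(midi_pitches, dtype=int) % 12          # fold octaves together
    counts = np.bincount(pcs, minlength=12).astype(float)   # one bin per pitch class
    total = counts.sum()
    return counts / total if total > 0 else counts          # all zeros for empty input

# C, E and G in two octaves plus a single D: C, E and G dominate the profile.
hist = pitch_class_histogram([60, 64, 67, 72, 76, 79, 62])
```

Because octave information is discarded, the histogram is comparable across pieces in different registers. That is one reason pitch-class profiles are a common building block for key and style features.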

Innovation and Impact

By processing MIDI files through feature engineering and a hybrid neural network architecture, this system demonstrates how machine learning can complement musicological research. It offers new insights into compositional analysis and practical applications for music education, digital humanities, and automated music cataloging.

This work represents a significant step toward understanding how artificial intelligence can capture and interpret the subtle nuances that define artistic style, with implications extending beyond music to other domains of creative expression and cultural analysis.

Objective

Develop a hybrid LSTM-CNN deep learning model to classify classical music compositions by composer (Bach, Beethoven, Chopin, Mozart) using multi-modal feature extraction from MIDI files.

Key Components

- Feature Engineering: Musical, harmonic, and sequential feature extraction

- Architecture: Hybrid LSTM-CNN fusion network for temporal and spatial pattern recognition

- Evaluation: Cross-validation with accuracy, precision, recall, and F1-score metrics

- Target: >85% classification accuracy with model interpretability analysis
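The evaluation metrics listed above can all be derived from a confusion matrix. The minimal NumPy sketch below assumes composers are integer-encoded (for example 0 = Bach, 1 = Beethoven, 2 = Chopin, 3 = Mozart). The function name is hypothetical. scikit-learn's `classification_report` reports the same per-class and macro-averaged quantities.

```python
import numpy as np

def macro_metrics(y_true, y_pred, n_classes):
    """Accuracy plus macro-averaged precision, recall and F1 from integer labels."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)          # rows = true class, columns = predicted class
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)                  # how often each class was predicted
    actual = cm.sum(axis=1)                     # how often each class truly occurs
    # Classes never predicted or never present get 0 instead of a division by zero.
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),          # macro average: each composer weighted equally
        "recall": recall.mean(),
        "f1": f1.mean(),
        "confusion_matrix": cm,
    }

example = macro_metrics([0, 0, 1, 1, 2, 2, 3, 3], [0, 0, 1, 3, 2, 2, 3, 1], n_classes=4)
```

Macro averaging weights each composer equally. This matters if the four classes end up imbalanced, because plain accuracy would then be dominated by the largest class.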

Deliverables

- Multi-modal feature extraction pipeline

- Hybrid neural network architecture

- Trained composer classification model

- Performance evaluation and optimization results

The project will use a dataset consisting of musical scores from various composers.

https://www.kaggle.com/datasets/blanderbuss/midi-classic-music

The dataset contains MIDI files of compositions by well-known classical composers such as Bach, Beethoven, Chopin, and Mozart, and each score must be labeled with its composer. Predictions are made only for the four composers below, so these composers must be selected from the full dataset:

- Bach

- Beethoven

- Chopin

- Mozart

%pip install -q -r requirements.txt

%load_ext autoreload

%autoreload 2



# Toggle the following flags to control the behavior of the notebook

EXTRACT_FEATURES = False

SAMPLING = False

SAMPLING_FILES_PER_COMPOSER = 20
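The `SAMPLING` flags are consumed later in the notebook, and that code is not shown in this section. `load_midi_files` already accepts a `max_files_per_composer` argument, which is one natural place for the cap. As a hypothetical alternative, the sketch below caps an existing file DataFrame per composer. It sorts first so the same subset is chosen on every run. The helper name and the `composer`/`filename` column names are assumptions that mirror the DataFrame `load_midi_files` returns.

```python
import pandas as pd

def sample_per_composer(df, n_per_composer):
    """Keep at most n_per_composer rows per composer, taking the first files
    in sorted filename order so the subset is reproducible."""
    return (df.sort_values(["composer", "filename"])
              .groupby("composer", sort=True)
              .head(n_per_composer)
              .reset_index(drop=True))

# Toy file list; in the notebook this would be the MIDI metadata DataFrame.
files = pd.DataFrame({
    "composer": ["Bach", "Bach", "Bach", "Chopin"],
    "filename": ["c.mid", "a.mid", "b.mid", "x.mid"],
})
demo_subset = sample_per_composer(files, n_per_composer=2)
```

In the notebook, this would be called as `sample_per_composer(midi_df, SAMPLING_FILES_PER_COMPOSER)` when `SAMPLING` is true.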

# System and utility imports
import sys
import os
import time
import warnings
import pickle
import hashlib
from functools import lru_cache
from collections import Counter, defaultdict
from concurrent.futures import ThreadPoolExecutor, as_completed
from multiprocessing import cpu_count
from tqdm import tqdm

# Data processing libraries
import numpy as np
import pandas as pd

# Visualization libraries
import matplotlib.pyplot as plt
import seaborn as sns
from mpl_toolkits.mplot3d import Axes3D

# Music processing libraries
import music21
from music21 import (
    converter, note, chord, roman, key, tempo, instrument,
    interval, pitch, stream
)
import pypianoroll
import pretty_midi
import librosa
import librosa.display

# Deep learning libraries
import torch
import tensorflow as tf

warnings.filterwarnings('ignore')

# Make modules in the project's ../src directory importable
sys.path.append(os.path.abspath(os.path.join(os.pardir, 'src')))

class EnvironmentSetup:
    """
    Centralized environment configuration class for faster music classification.
    Handles all environment setup including GPU configuration, reproducibility,
    and optimization settings.
    """

    def __init__(self):
        self.random_seed = 42
        self.optimal_workers = None
        self.gpu_configured = False
        self.cuda_available = False
        self.config_summary = []
        self.force_gpu = True      # Force GPU usage when available
        self.cuda_device_id = 0    # Default CUDA device ID to use
        self.color_pallete = "viridis"

    def configure_environment(self):
        """Configure all environment settings for performance and reproducibility."""
        self._configure_reproducibility()
        self._configure_gpu_and_memory()
        self._configure_cpu_workers()
        self._configure_visualization()
        self._configure_pandas()
        self._configure_warnings()
        self._print_configuration_summary()

    def _configure_reproducibility(self):
        """Set random seeds for reproducible results."""
        np.random.seed(self.random_seed)
        tf.random.set_seed(self.random_seed)
        torch.manual_seed(self.random_seed)  # Seeds PyTorch's generators on all devices
        self.config_summary.append(f"Random seeds set to {self.random_seed} for reproducibility")

    def _configure_gpu_and_memory(self):
        """Configure GPU settings and memory management."""
        try:
            # PyTorch / CUDA
            self.cuda_available = torch.cuda.is_available()
            if self.cuda_available:
                cuda_version = torch.version.cuda
                device_count = torch.cuda.device_count()
                self.config_summary.append(f"CUDA is available (version: {cuda_version}, devices: {device_count})")

                if self.cuda_device_id < device_count:
                    torch.cuda.set_device(self.cuda_device_id)
                    self.config_summary.append(f"Set active CUDA device to: {torch.cuda.get_device_name(self.cuda_device_id)}")

                torch.cuda.manual_seed_all(self.random_seed)
                # Deterministic cuDNN kernels. Benchmark mode must stay off: its autotuner
                # may select different (non-deterministic) algorithms from run to run.
                torch.backends.cudnn.deterministic = True
                torch.backends.cudnn.benchmark = False
                self.config_summary.append(f"CUDA random seed set to {self.random_seed}")
                self.config_summary.append("CUDA deterministic mode: enabled")
                self.config_summary.append("CUDA benchmark mode: disabled (required for determinism)")

                # Export the device for other libraries; has no effect on CUDA contexts already created
                os.environ["CUDA_VISIBLE_DEVICES"] = str(self.cuda_device_id)
                self.config_summary.append(f"Set CUDA_VISIBLE_DEVICES={self.cuda_device_id}")
            else:
                self.config_summary.append("CUDA is not available")

            # TensorFlow
            gpus = tf.config.experimental.list_physical_devices('GPU')
            if gpus:
                self.config_summary.append(f"Found {len(gpus)} GPU(s)")
                if self.force_gpu:
                    tf.config.set_visible_devices(gpus, 'GPU')
                    self.config_summary.append("Forced TensorFlow to use GPU")

                    # Allocate GPU memory on demand instead of reserving all of it up front
                    for gpu in gpus:
                        tf.config.experimental.set_memory_growth(gpu, True)
                        self.config_summary.append(f"Configured memory growth for GPU: {gpu.name}")

                    # XLA JIT compilation and mixed precision for modern GPUs
                    tf.config.optimizer.set_jit(True)
                    policy = tf.keras.mixed_precision.Policy('mixed_float16')
                    tf.keras.mixed_precision.set_global_policy(policy)
                    self.config_summary.append("Enabled mixed precision training (float16)")
                    self.config_summary.append("Enabled XLA compilation for faster execution")

                    tf.config.experimental.set_device_policy('explicit')
                    self.config_summary.append("Set explicit device placement policy")
                    self.gpu_configured = True
                else:
                    self.config_summary.append("GPU available but not forced (force_gpu=False)")
            else:
                self.config_summary.append("No GPU found in TensorFlow. Using CPU for computation.")
                # 0 lets TensorFlow choose the thread counts (typically all available cores)
                tf.config.threading.set_intra_op_parallelism_threads(0)
                tf.config.threading.set_inter_op_parallelism_threads(0)
                self.config_summary.append("Configured CPU threading for optimal performance")

        except Exception as e:
            self.config_summary.append(f"Error configuring GPU: {e}")
            self.config_summary.append("Falling back to default CPU configuration")

    def set_cuda_device(self, device_id=0):
        """Select the CUDA device (call before configure_environment)."""
        self.cuda_device_id = device_id

    def toggle_force_gpu(self, force=True):
        """Enable or disable forcing TensorFlow onto the GPU (call before configure_environment)."""
        self.force_gpu = force

    def _configure_cpu_workers(self):
        """Configure the number of workers for parallel processing."""
        self.optimal_workers = min(cpu_count(), 12)  # Cap at 12 to avoid memory issues
        globals()['OPTIMAL_WORKERS'] = self.optimal_workers  # Make it globally accessible
        self.config_summary.append(f"Configured {self.optimal_workers} workers for parallel processing (CPU cores: {cpu_count()})")

    def _configure_visualization(self):
        """Configure matplotlib and seaborn settings."""
        # Reset matplotlib first: applying its default style after seaborn would discard whitegrid
        plt.style.use('default')
        sns.set(style='whitegrid')
        plt.ioff()  # Turn off interactive mode
        self.config_summary.append("Visualization settings configured (seaborn whitegrid, matplotlib default)")
        self.color_pallete = sns.color_palette("viridis")

    def _configure_pandas(self):
        """Configure pandas display options."""
        pd.set_option('display.max_columns', None)
        pd.set_option('display.width', None)
        pd.set_option('display.max_rows', 100)
        self.config_summary.append("Pandas display options configured (max_columns=None, max_rows=100)")

    def _configure_warnings(self):
        """Suppress unnecessary warnings."""
        warnings.filterwarnings('ignore')
        self.config_summary.append("Warning filters configured (suppressed for cleaner output)")

    def _print_configuration_summary(self):
        """Print a summary of the current configuration."""
        print("=" * 60)
        print("ENVIRONMENT CONFIGURATION SUMMARY")
        print("=" * 60)
        for item in self.config_summary:
            print(item)

        print("\nCONFIGURATION DETAILS:")
        print(f"Random Seed: {self.random_seed}")
        print(f"CPU Workers: {self.optimal_workers}")
        print(f"GPU Configured: {self.gpu_configured}")
        print(f"CUDA Available: {self.cuda_available}")
        print(f"Force GPU: {self.force_gpu}")
        if self.cuda_available:
            print(f"Active CUDA Device: {torch.cuda.get_device_name(self.cuda_device_id)}")
            print(f"CUDA Memory Allocated: {torch.cuda.memory_allocated(self.cuda_device_id) / 1024**2:.2f} MB")
            print(f"CUDA Memory Reserved: {torch.cuda.memory_reserved(self.cuda_device_id) / 1024**2:.2f} MB")
        if self.gpu_configured:
            gpus = tf.config.experimental.list_physical_devices('GPU')
            for i, gpu in enumerate(gpus):
                print(f"GPU {i}: {gpu.name}")
        print(f"TensorFlow Version: {tf.__version__}")
        print(f"PyTorch Version: {torch.__version__}")
        print(f"NumPy Version: {np.__version__}")
        print(f"Pandas Version: {pd.__version__}")
        print("=" * 60)

# Initialize and configure environment

env_config = EnvironmentSetup()

env_config.configure_environment()

OPTIMAL_WORKERS = env_config.optimal_workers

============================================================
ENVIRONMENT CONFIGURATION SUMMARY
============================================================
Random seeds set to 42 for reproducibility
CUDA is not available
No GPU found in TensorFlow. Using CPU for computation.
Configured CPU threading for optimal performance
Configured 12 workers for parallel processing (CPU cores: 20)
Visualization settings configured (seaborn whitegrid, matplotlib default)
Pandas display options configured (max_columns=None, max_rows=100)
Warning filters configured (suppressed for cleaner output)

CONFIGURATION DETAILS:
Random Seed: 42
CPU Workers: 12
GPU Configured: False
CUDA Available: False
Force GPU: True
TensorFlow Version: 2.20.0-rc0
PyTorch Version: 2.7.1+cpu
NumPy Version: 2.2.6
Pandas Version: 2.3.1
============================================================

The EnvironmentSetup class centralizes system configuration for deep learning. It detects and configures GPU/CPU resources, sets random seeds, and enables on-demand GPU memory growth. This keeps runs repeatable on a given machine and software stack, and makes use of whatever hardware is available for neural network training.
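Seeding only helps if it covers every random number generator the pipeline touches. For example, Python's built-in `random` module is independent of NumPy's generator. The minimal sketch below assumes only those two generators are in play; framework seeding, as `EnvironmentSetup` does for TensorFlow, is left as a comment. `seed_everything` is a hypothetical helper name.

```python
import random
import numpy as np

def seed_everything(seed=42):
    """Seed Python's and NumPy's global random number generators."""
    random.seed(seed)
    np.random.seed(seed)
    # Framework generators need their own calls, e.g. tf.random.set_seed(seed)
    # or torch.manual_seed(seed), when those libraries are used.

# Re-seeding reproduces the exact same draws.
seed_everything(42)
first = (random.random(), np.random.rand(3))
seed_everything(42)
second = (random.random(), np.random.rand(3))
```

Fixed seeds make runs repeatable on the same software and hardware. GPU kernels and library versions can still introduce small numerical differences.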

try:
    from google.colab import drive
    COLAB_DRIVE_PATH = '/content/drive'
    drive.mount(COLAB_DRIVE_PATH)
    DATA_DIRECTORY = f"{COLAB_DRIVE_PATH}/MyDrive/data"
    MUSIC_DATA_DIRECTORY = f"{DATA_DIRECTORY}/midiclassics"
    IS_COLAB = True
    print(f"Google Drive mounted at {COLAB_DRIVE_PATH}")
except ImportError:
    # google.colab is only importable inside Colab, so fall back to local paths
    DATA_DIRECTORY = "data"
    MUSIC_DATA_DIRECTORY = os.path.join(DATA_DIRECTORY, "midiclassics")  # Portable path separator
    IS_COLAB = False
    print("Running in local environment, not Google Colab")

MUSICAL_FEATURES_DF_PATH = f'{DATA_DIRECTORY}/features/musical_features_df.pkl'
HARMONIC_FEATURES_DF_PATH = f'{DATA_DIRECTORY}/features/harmonic_features_df.pkl'
NOTE_SEQUENCES_PATH = f'{DATA_DIRECTORY}/features/note_sequences.npy'
NOTE_SEQUENCES_LABELS_PATH = f'{DATA_DIRECTORY}/features/sequence_labels.npy'
NOTE_MAPPING_PATH = f'{DATA_DIRECTORY}/features/note_mapping.pkl'
MODEL_ARTIFACTS_PATH = f'{DATA_DIRECTORY}/model/composer_classification_model_artifacts.pkl'
MODEL_HISTORY_PATH = f'{DATA_DIRECTORY}/model/composer_classification_model_history.pkl'
MODEL_PATH = f'{DATA_DIRECTORY}/model/composer_classification_model.keras'
MODEL_BEST_PATH = f'{DATA_DIRECTORY}/model/composer_classification_model_best.keras'

# List of target composers for classification
TARGET_COMPOSERS = ['Bach', 'Beethoven', 'Chopin', 'Mozart']
print(f"Target composers for classification: {TARGET_COMPOSERS}")

Running in local environment, not Google Colab
Target composers for classification: ['Bach', 'Beethoven', 'Chopin', 'Mozart']

This project aims to develop an accurate deep learning–based system for composer classification, using MIDI data as the primary input. To achieve this, we implement a MidiProcessor class (below) that streamlines the end-to-end preprocessing pipeline. It includes the following:

Scanning the MIDI dataset

- load_midi_files() searches through composer-specific folders, recursively finding .mid and .midi files.

- Uses parallel processing via ThreadPoolExecutor to scan multiple composers at once.

- Returns a pandas DataFrame containing file paths, composer labels, and filenames for downstream processing.
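The same scan can be sketched with `pathlib`. This minimal version assumes the `data_dir/<composer>/...` layout described above. It sorts composers and files so that any `max_files_per_composer` cap selects the same files on every run. `scan_midi_files` is a hypothetical name, and the record keys mirror the DataFrame columns described above.

```python
import os
import tempfile
from pathlib import Path

MIDI_SUFFIXES = {".mid", ".midi"}

def scan_midi_files(data_dir, max_files_per_composer=None):
    """Recursively list MIDI files under data_dir/<composer>/, in sorted order."""
    records = []
    for composer_dir in sorted(p for p in Path(data_dir).iterdir() if p.is_dir()):
        # rglob("*") walks every subfolder; keep only files with a MIDI extension
        files = sorted(p for p in composer_dir.rglob("*")
                       if p.is_file() and p.suffix.lower() in MIDI_SUFFIXES)
        if max_files_per_composer:
            files = files[:max_files_per_composer]
        for path in files:
            records.append({
                "file_path": str(path),
                "composer": composer_dir.name,
                "filename": str(path.relative_to(composer_dir)),
            })
    return records

# Usage demo on a throwaway directory tree (the .txt file is ignored).
with tempfile.TemporaryDirectory() as tmp:
    for rel in ["Bach/a.mid", "Bach/sub/b.MIDI", "Bach/notes.txt", "Chopin/c.mid"]:
        p = os.path.join(tmp, rel)
        os.makedirs(os.path.dirname(p), exist_ok=True)
        open(p, "wb").close()
    demo = scan_midi_files(tmp)
    demo_capped = scan_midi_files(tmp, max_files_per_composer=1)
```

Wrapping the result in `pd.DataFrame(...)` gives the same shape of table the notebook works with.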

Extracting notes from MIDI files

- extract_notes_from_midi() parses a single MIDI file, extracting individual notes and chords (converted into pitch strings).

- Limits extraction to a maximum number of notes for efficiency.

- Includes fallback handling for flat MIDI structures and skips empty or corrupted files.

- extract_notes_from_midi_parallel() enables batch note extraction in parallel with a progress bar for large datasets.
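`extract_notes_from_midi_parallel` lines results up with their inputs by recording each batch's index and writing results back at `batch_idx * batch_size`. A simpler pattern is `Executor.map`, which yields results in submission order. The sketch below assumes per-file calls are independent, and the helper name is hypothetical. Because music21 is pure Python, threads are likely limited by the GIL during parsing. `ProcessPoolExecutor` offers the same `map` interface and may parallelize CPU-bound parsing better, provided the worker function is importable from a module (required on Windows, where processes are spawned).

```python
from concurrent.futures import ThreadPoolExecutor

def parallel_map_ordered(func, items, n_jobs=4):
    """Apply func to every item using a thread pool and return results in input order.

    Executor.map preserves submission order, so no batch-index bookkeeping is
    needed to match results back to their inputs.
    """
    with ThreadPoolExecutor(max_workers=n_jobs) as executor:
        return list(executor.map(func, items))

# Toy stand-in for per-file extraction: the length of each note token.
token_lengths = parallel_map_ordered(len, ["C4", "E-4", "0.4.7"])
```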

Extracting detailed musical features

- extract_musical_features() analyzes each MIDI file to gather rich metadata:

  - Total notes, total duration, time signature, key and key confidence, tempo.

  - Pitch statistics (average, range, standard deviation), intervals, note durations.

  - Structural features such as the number of parts, measures, chords, and rests, the rest ratio, and average notes per measure.

  - Instrument detection and chord type analysis.

- extract_musical_features_parallel() runs this process in parallel for scalability.
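The pitch and interval statistics above reduce to a few vectorized NumPy operations once pitches are collected as MIDI numbers. In `extract_musical_features`, chords contribute their root. The minimal sketch below reproduces just that part, with the same fallback values the method uses when there are no pitches. The function name is hypothetical.

```python
import numpy as np

def pitch_interval_stats(midi_pitches):
    """Pitch and melodic-interval statistics from a sequence of MIDI note numbers."""
    p = np.asarray(midi_pitches, dtype=float)
    if p.size == 0:
        return {"avg_pitch": 60.0, "pitch_range": 0.0, "pitch_std": 0.0,
                "avg_interval": 0.0, "interval_std": 0.0}
    steps = np.abs(np.diff(p))  # absolute semitone distance between consecutive events
    return {
        "avg_pitch": float(p.mean()),
        "pitch_range": float(np.ptp(p)),   # highest minus lowest pitch
        "pitch_std": float(p.std()),
        "avg_interval": float(steps.mean()) if steps.size else 0.0,
        "interval_std": float(steps.std()) if steps.size else 0.0,
    }

# C major pentachord C-D-E-F-G: steps of 2, 2, 1, 2 semitones.
stats = pitch_interval_stats([60, 62, 64, 65, 67])
```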

Creating note sequences for deep learning models

- create_note_sequences() builds a vocabulary of unique notes from the dataset.

- Converts notes into integer representations and slices them into fixed-length sequences (e.g., 100-note windows) for LSTM/CNN model training.

- Includes batching and a memory safeguard (sequence creation stops once more than 50,000 sequences exist) to handle large datasets efficiently.
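`create_note_sequences` returns windows without their composer labels. The labels therefore have to be tracked separately (the notebook saves `sequence_labels.npy`, but how that file is produced is not shown here). The minimal sketch below keeps a label and a source-file index next to every window. It assumes each file's notes are already integer-encoded, and the function name is hypothetical. Stride-1 windows from the same file overlap heavily, so splitting train and test sets by window rather than by file can leak information. The file index makes a file-level split possible, for example with scikit-learn's `GroupKFold`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def windows_with_labels(token_lists, labels, sequence_length=100, stride=1):
    """Cut each file's token list into fixed-length windows, keeping the file's
    label and index alongside every window."""
    X, y, file_ids = [], [], []
    for file_idx, (tokens, label) in enumerate(zip(token_lists, labels)):
        tokens = np.asarray(tokens, dtype=np.int64)
        if tokens.size < sequence_length:
            continue  # too short to yield a single window
        wins = sliding_window_view(tokens, sequence_length)[::stride]
        X.append(wins)
        y.extend([label] * len(wins))
        file_ids.extend([file_idx] * len(wins))
    if not X:
        return np.empty((0, sequence_length), dtype=np.int64), np.array(y), np.array(file_ids)
    return np.concatenate(X), np.array(y), np.array(file_ids)

X_demo, y_demo, groups_demo = windows_with_labels(
    [list(range(6)), [1, 2], [7, 8, 9, 10]], ["Bach", "Chopin", "Mozart"], sequence_length=3)
```

In the demo, the second file is too short for a single window and is skipped. The first file yields four windows and the third yields two.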

Preprocessing and filtering the dataset

- preprocess_midi_files() filters out invalid, duplicate, corrupted, too-short, or low-key-confidence MIDI files.

- Uses file hashing to detect duplicates, and minimum thresholds for duration and key confidence.

- Tracks processing statistics to provide a clear summary of dataset quality after filtering.
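The `key_confidence` feature and the `min_key_confidence=0.7` filter both rely on the correlation coefficient that music21 reports from its key analysis. To show what that number measures, here is a minimal sketch of correlation-based key finding with the Krumhansl-Kessler key profiles. The piece's pitch-class histogram is correlated with each of the 24 rotated major and minor profiles, and the best match wins. music21's default weighting may differ from these profiles, so treat the exact values as illustrative. `estimate_key` is a hypothetical name.

```python
import numpy as np

# Krumhansl-Kessler key profiles; index 0 is the tonic.
MAJOR_PROFILE = np.array([6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88])
MINOR_PROFILE = np.array([6.33, 2.68, 3.52, 5.38, 2.60, 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17])

def estimate_key(midi_pitches):
    """Return (tonic_pitch_class, mode, correlation) of the best-matching key."""
    hist = np.bincount(np.asarray(midi_pitches, dtype=int) % 12, minlength=12).astype(float)
    best = (0, "major", -np.inf)
    for tonic in range(12):
        for mode, profile in (("major", MAJOR_PROFILE), ("minor", MINOR_PROFILE)):
            # np.roll moves the profile's tonic weight onto pitch class `tonic`
            r = np.corrcoef(hist, np.roll(profile, tonic))[0, 1]
            if r > best[2]:
                best = (tonic, mode, r)
    return best

phrase = [60, 64, 67, 60, 64, 67, 72, 62, 65, 69, 71]   # C major triads plus scale tones
c_key = estimate_key(phrase)
g_key = estimate_key([p + 7 for p in phrase])          # same phrase transposed up a fifth
```

A low best-match correlation means the pitch content fits no single key well. Files like that are what the key-confidence threshold removes.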

class MidiProcessor:
    def __init__(self):
        self._file_cache = {}     # Cache for parsed files
        self._feature_cache = {}  # Cache for extracted features

    def load_midi_files(self, data_dir, max_files_per_composer=None, n_jobs=None):
        """Scan data_dir/<composer>/ recursively and return one row per MIDI file."""
        if n_jobs is None:
            n_jobs = OPTIMAL_WORKERS
        start_time = time.time()

        # Composer names are the immediate subdirectories of data_dir
        composers = sorted(c for c in os.listdir(data_dir)
                           if os.path.isdir(os.path.join(data_dir, c)))

        def scan_composer(composer):
            composer_path = os.path.join(data_dir, composer)
            try:
                # Recursive search; paths are stored relative to composer_path
                midi_files = []
                for root, dirs, files in os.walk(composer_path):
                    for file in files:
                        if file.lower().endswith(('.mid', '.midi')):
                            midi_files.append(os.path.relpath(os.path.join(root, file), composer_path))

                # Sort so that max_files_per_composer selects the same files on every run
                midi_files.sort()
                if max_files_per_composer:
                    midi_files = midi_files[:max_files_per_composer]

                return [
                    {
                        'file_path': os.path.join(composer_path, midi_file),
                        'composer': composer,
                        'filename': midi_file,
                    }
                    for midi_file in midi_files
                ]
            except Exception as e:
                print(f"Error scanning {composer}: {e}")
                return []

        # Scan composers in parallel; executor.map keeps results in composer order
        all_files = []
        with ThreadPoolExecutor(max_workers=n_jobs) as executor:
            for composer_files in executor.map(scan_composer, composers):
                all_files.extend(composer_files)

        elapsed = time.time() - start_time
        print(f"Loaded {len(all_files)} MIDI files from {len(composers)} composers in {elapsed:.2f}s")
        return pd.DataFrame(all_files)

    @staticmethod
    def extract_notes_from_midi(midi_path, max_notes=500):
        """Return up to max_notes tokens: pitch names for notes, dot-joined
        normal-order pitch classes for chords (e.g. '0.4.7')."""
        try:
            midi = converter.parse(midi_path)
            notes = []

            def add_element(element):
                if isinstance(element, note.Note):
                    notes.append(str(element.pitch))
                elif isinstance(element, chord.Chord):
                    notes.append('.'.join(str(n) for n in element.normalOrder))

            if hasattr(midi, 'parts') and midi.parts:
                # Only the first two parts are processed, for speed
                for part in midi.parts[:2]:
                    for element in part.recurse().notes:
                        if len(notes) >= max_notes:
                            break
                        add_element(element)
                    if len(notes) >= max_notes:
                        break
            else:
                # Flat structure (.flatten() replaces the deprecated .flat)
                for element in midi.flatten().notes:
                    if len(notes) >= max_notes:
                        break
                    add_element(element)

            return notes[:max_notes]  # Ensure we don't exceed the limit
        except Exception as e:
            print(f"Error processing {midi_path}: {str(e)}")
            return []

    @staticmethod
    def extract_notes_batch(midi_paths_batch, max_notes=500):
        """Extract notes from each path in a batch, sequentially."""
        return [MidiProcessor.extract_notes_from_midi(p, max_notes) for p in midi_paths_batch]

    @staticmethod
    def extract_notes_from_midi_parallel(midi_paths, max_notes=500, n_jobs=None):
        if n_jobs is None:
            n_jobs = OPTIMAL_WORKERS
        total_files = len(midi_paths)

        # Contiguous batches, about two per worker, for better memory management
        batch_size = max(1, total_files // (n_jobs * 2))
        batches = [midi_paths[i:i + batch_size] for i in range(0, total_files, batch_size)]
        results = [None] * total_files

        pbar = tqdm(total=total_files, desc="Extracting notes from MIDI files")

        def process_batch(batch_data):
            batch_idx, batch_paths = batch_data
            batch_results = MidiProcessor.extract_notes_batch(batch_paths, max_notes)
            pbar.update(len(batch_paths))  # Advance by the number of files in this batch
            return batch_idx, batch_results

        try:
            with ThreadPoolExecutor(max_workers=n_jobs) as executor:
                futures = [executor.submit(process_batch, (i, batch)) for i, batch in enumerate(batches)]
                for future in as_completed(futures):
                    batch_idx, batch_results = future.result()
                    # Batches are contiguous, so a batch starts at batch_idx * batch_size
                    batch_start = batch_idx * batch_size
                    for i, notes in enumerate(batch_results):
                        results[batch_start + i] = notes
        finally:
            pbar.close()

        successful = sum(1 for r in results if r is not None and len(r) > 0)
        print(f"\nNote extraction complete: {successful}/{total_files} files processed successfully")
        return results

    @staticmethod
    def extract_musical_features(midi_path):
        try:
            midi = converter.parse(midi_path)
            flat = midi.flatten()  # Flatten once and reuse (.flat is deprecated)
            features = {}

            # Basic counts
            all_notes = list(flat.notes)
            features['total_notes'] = len(all_notes)
            features['total_duration'] = float(midi.duration.quarterLength) if midi.duration else 0

            # Time signature (first one found, default 4/4)
            time_sigs = midi.getTimeSignatures()
            if time_sigs:
                features['time_signature'] = f"{time_sigs[0].numerator}/{time_sigs[0].denominator}"
            else:
                features['time_signature'] = "4/4"

            # Key analysis; the correlation coefficient serves as a confidence score
            try:
                key_sigs = midi.analyze('key')
                features['key'] = str(key_sigs) if key_sigs else "C major"
                if hasattr(key_sigs, 'correlationCoefficient'):
                    features['key_confidence'] = float(abs(key_sigs.correlationCoefficient))
                else:
                    features['key_confidence'] = 0.5
            except Exception:
                features['key'] = "C major"
                features['key_confidence'] = 0.5

            # Tempo (first metronome mark that has a BPM number, default 120)
            tempo_markings = list(flat.getElementsByClass('MetronomeMark'))
            if tempo_markings and tempo_markings[0].number is not None:
                features['tempo'] = float(tempo_markings[0].number)
            else:
                features['tempo'] = 120.0

            # Pitch and duration values (chords contribute their root); capped for speed
            pitches = []
            durations = []
            for n in all_notes[:1000]:
                if isinstance(n, note.Note):
                    pitches.append(n.pitch.midi)
                    durations.append(float(n.duration.quarterLength))
                elif isinstance(n, chord.Chord):
                    pitches.append(n.root().midi)
                    durations.append(float(n.duration.quarterLength))

            if pitches:
                pitches_array = np.array(pitches)
                features['avg_pitch'] = float(np.mean(pitches_array))
                features['pitch_range'] = float(np.ptp(pitches_array))
                features['pitch_std'] = float(np.std(pitches_array))
                # Absolute intervals between consecutive events
                if len(pitches) > 1:
                    intervals = np.abs(np.diff(pitches_array))
                    features['avg_interval'] = float(np.mean(intervals))
                    features['interval_std'] = float(np.std(intervals))
                else:
                    features['avg_interval'] = 0.0
                    features['interval_std'] = 0.0
            else:
                features.update({
                    'avg_pitch': 60.0, 'pitch_range': 0.0, 'pitch_std': 0.0,
                    'avg_interval': 0.0, 'interval_std': 0.0
                })

            # Duration features
            if durations:
                dur_array = np.array(durations)
                features['avg_duration'] = float(np.mean(dur_array))
                features['duration_std'] = float(np.std(dur_array))
            else:
                features['avg_duration'] = 1.0
                features['duration_std'] = 0.0

            # Structural features
            features['num_parts'] = len(midi.parts) if hasattr(midi, 'parts') else 1

            # Chords and rests are counted over the first 500 notes-and-rests only
            limited_elements = list(flat.notesAndRests)[:500]
            chords = [el for el in limited_elements if isinstance(el, chord.Chord)]
            rests = [el for el in limited_elements if isinstance(el, note.Rest)]
            features['num_chords'] = len(chords)
            features['num_rests'] = len(rests)
            # Divide by the same 500-element window the rests were counted in
            features['rest_ratio'] = len(rests) / len(limited_elements) if limited_elements else 0

            # Measures (fall back to total duration / 4 quarter notes)
            if hasattr(midi, 'parts') and midi.parts:
                measures = list(midi.parts[0].getElementsByClass('Measure'))
                features['num_measures'] = len(measures) if measures else max(1, int(features['total_duration'] / 4))
            else:
                features['num_measures'] = max(1, int(features['total_duration'] / 4))
            features['avg_notes_per_measure'] = features['total_notes'] / features['num_measures'] if features['num_measures'] > 0 else 0

            # Instruments of the first three parts
            instruments = []
            if hasattr(midi, 'parts') and midi.parts:
                for part in midi.parts[:3]:
                    try:
                        instruments.append(str(part.getInstrument(returnDefault=True)))
                    except Exception:
                        instruments.append('Piano')
            else:
                instruments = ['Piano']
            features['instruments'] = list(set(instruments))

            # Chord types of the first 10 chords
            chord_types = []
            for c in chords[:10]:
                try:
                    chord_types.append(c.commonName)
                except Exception:
                    chord_types.append('Unknown')
            features['chord_types'] = list(set(chord_types))

            return features

        except Exception as e:
            print(f"Error extracting features from {midi_path}: {str(e)}")
            return {
                'total_notes': 0, 'total_duration': 0, 'time_signature': '4/4',
                'key': 'C major', 'key_confidence': 0, 'tempo': 120.0,
                'avg_pitch': 60.0, 'pitch_range': 0, 'pitch_std': 0,
                'avg_duration': 1.0, 'duration_std': 0,
                'avg_interval': 0, 'interval_std': 0, 'num_parts': 1,
                'num_chords': 0, 'num_rests': 0, 'rest_ratio': 0,
                'num_measures': 1, 'avg_notes_per_measure': 0,
                'instruments': ['Piano'], 'chord_types': []
            }

    @staticmethod
    def extract_musical_features_parallel(midi_paths, n_jobs=None):
        if n_jobs is None:
            n_jobs = OPTIMAL_WORKERS
        total_files = len(midi_paths)
        results = [None] * total_files
        pbar = tqdm(total=total_files, desc="Extracting musical features")

        def worker(idx, midi_path):
            try:
                return idx, MidiProcessor.extract_musical_features(midi_path)
            except Exception as e:
                print(f"\nError processing {midi_path}: {e}")
                # Parsing None fails inside extract_musical_features, which returns the default features
                return idx, MidiProcessor.extract_musical_features(None)
            finally:
                pbar.update(1)

        try:
            with ThreadPoolExecutor(max_workers=n_jobs) as executor:
                futures = [executor.submit(worker, idx, path) for idx, path in enumerate(midi_paths)]
                for future in as_completed(futures):
                    try:
                        idx, features = future.result()
                        results[idx] = features
                    except Exception as e:
                        print(f"\nError in feature extraction worker: {e}")
        finally:
            pbar.close()

        successful = sum(1 for r in results if r is not None and r.get('total_notes', 0) > 0)
        print(f"\nFeature extraction complete: {successful}/{total_files} files processed successfully")
        return results

    @staticmethod
    def create_note_sequences(notes_list, sequence_length=100):
        # Per-file cap applied to BOTH the vocabulary and the sequences, so every
        # token that is windowed is guaranteed to be in note_to_int
        max_notes_per_file = 1000

        print("Building vocabulary...")
        unique_notes = sorted({tok for notes in notes_list if notes for tok in notes[:max_notes_per_file]})
        note_to_int = {tok: i for i, tok in enumerate(unique_notes)}
        print(f"Vocabulary size: {len(unique_notes)}")

        print("Creating sequences...")
        sequences = []
        batch_size = 1000
        for i in range(0, len(notes_list), batch_size):
            batch_sequences = []
            for notes in notes_list[i:i + batch_size]:
                if not notes or len(notes) < sequence_length:
                    continue
                note_ints = [note_to_int[tok] for tok in notes[:max_notes_per_file]]
                # Sliding windows with stride 1
                for j in range(len(note_ints) - sequence_length + 1):
                    batch_sequences.append(note_ints[j:j + sequence_length])
            sequences.extend(batch_sequences)

            if i % (batch_size * 10) == 0:
                print(f"Processed {min(i + batch_size, len(notes_list))}/{len(notes_list)} files, "
                      f"created {len(sequences)} sequences")

            # Stop once enough sequences exist, to prevent memory overflow
            if len(sequences) > 50000:
                break

        print(f"Total sequences created: {len(sequences)}")
        return np.array(sequences), note_to_int, unique_notes

    @staticmethod
    def preprocess_midi_files(file_df, min_duration=5.0, min_key_confidence=0.7, verbose=True, n_jobs=None):
        """Drop missing, corrupted, too-short, ambiguous-key, and duplicate files."""
        if n_jobs is None:
            n_jobs = OPTIMAL_WORKERS
        processed_files = []
        seen_hashes = set()
        total = len(file_df)
        stats = {
            'total': total,
            'corrupted': 0,
            'zero_length': 0,
            'duplicates': 0,
            'too_short': 0,
            'ambiguous_key': 0,
            'kept': 0,
        }

        def process_file(row_data):
            idx, row = row_data
            path = row['file_path']
            try:
                # Quick file validation
                if not os.path.exists(path) or os.path.getsize(path) == 0:
                    return ('zero_length', None)

                # Content hash for deduplication
                with open(path, 'rb') as f:
                    file_hash = hashlib.md5(f.read()).hexdigest()

                # Basic MIDI parsing and minimum-duration check
                score = converter.parse(path)
                duration = score.duration.seconds if score.duration else 0
                if duration < min_duration:
                    return ('too_short', None)

                # Key confidence check (skipped when min_key_confidence is 0)
                if min_key_confidence > 0:
                    try:
                        key_obj = score.analyze('key')
                        if hasattr(key_obj, 'correlationCoefficient'):
                            confidence = abs(key_obj.correlationCoefficient)
                        else:
                            confidence = 1.0
                        if confidence < min_key_confidence:
                            return ('ambiguous_key', None)
                    except Exception:
                        return ('ambiguous_key', None)

                return ('kept', (file_hash, row))
            except Exception:
                return ('corrupted', None)

        completed = 0
        with ThreadPoolExecutor(max_workers=n_jobs) as executor:
            futures = [executor.submit(process_file, data) for data in file_df.iterrows()]
            for future in as_completed(futures):
                result, data = future.result()
                completed += 1
                if verbose and completed % 50 == 0:
                    percent = (completed / total) * 100
                    print(f"\rProcessing: {completed}/{total} ({percent:.1f}%)", end='', flush=True)

                if result == 'kept' and data:
                    file_hash, row = data
                    # The first copy to finish is kept; later identical files count as duplicates
                    if file_hash not in seen_hashes:
                        seen_hashes.add(file_hash)
                        processed_files.append(row)
                        stats['kept'] += 1
                    else:
                        stats['duplicates'] += 1
                else:
                    stats[result] += 1

        if verbose:
            print()  # New line after the progress indicator
            print("\nPreprocessing Results:")
            for name, count in stats.items():
                print(f"{name.replace('_', ' ').title()}: {count}")

        return pd.DataFrame(processed_files)

The MidiProcessor above addresses the challenges of preparing a large, multi-class MIDI dataset.

Manual data cleaning for such datasets can be slow and prone to error, especially when handling files from different sources with varying lengths, complexities, and numerous features. By automating our MIDI dataset scanning, parallelized note and feature extraction, sequence creation, and filtering, this pipeline provides us with quality input data that captures some key musical attributes necessary for training LSTM and CNN models.
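Duplicate detection is one of the filtering steps. `preprocess_midi_files` hashes each file's bytes with MD5 and keeps whichever copy its thread pool finishes first. As a result, which of several identical files survives can differ between runs. The minimal sketch below sorts the paths first, so the kept copy is deterministic. The helper name is hypothetical. Like the original, it only catches byte-identical files, not the same piece encoded differently.

```python
import hashlib
import os
import tempfile
from pathlib import Path

def dedupe_by_content(paths):
    """Keep the first path (in sorted order) for each distinct file content."""
    seen = {}          # MD5 digest -> first path with that content
    duplicates = []
    for path in sorted(paths):
        digest = hashlib.md5(Path(path).read_bytes()).hexdigest()
        if digest in seen:
            duplicates.append(path)
        else:
            seen[digest] = path
    return list(seen.values()), duplicates

# Usage demo: a.mid and b.mid have identical bytes, c.mid differs.
with tempfile.TemporaryDirectory() as tmp:
    demo_paths = []
    for name, payload in [("b.mid", b"x"), ("a.mid", b"x"), ("c.mid", b"y")]:
        p = os.path.join(tmp, name)
        Path(p).write_bytes(payload)
        demo_paths.append(p)
    kept, dupes = dedupe_by_content(demo_paths)
    kept_names = [Path(p).name for p in kept]
    dupe_names = [Path(p).name for p in dupes]
```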

The next preprocessing step initializes the MidiProcessor and prepares the dataset for deep learning–based composer classification. The pipeline:

Initialization & Metadata Loading

- Creates a MidiProcessor instance and loads all MIDI file metadata from the specified dataset directory into a Pandas DataFrame.

Filtering Target Composers

- Restricts the dataset to a predefined set of composers (TARGET_COMPOSERS) and removes any files with missing or invalid paths.

Dataset Overview

- Counts and prints the total number of MIDI files before and after filtering to track dataset size and integrity.

Data Visualization

- Generates two plots for quick dataset inspection:

- Bar Chart: Displays the number of valid MIDI files for each target composer, with counts labeled on the bars.

- Pie Chart: Shows the proportion of MIDI files per composer, giving a visual breakdown of class distribution.

Justification:

These steps ensure that the dataset used for training is both relevant (only target composers) and valid (no missing or invalid file paths). The visualizations give an immediate overview of class balance, which is crucial for spotting potential class imbalance issues before model training.

# Initialize MidiProcessor

midi_processor = MidiProcessor()

# Load MIDI file metadata into a DataFrame

all_midi_df = midi_processor.load_midi_files(MUSIC_DATA_DIRECTORY)

# Define colors dictionary for visualization

colors = dict(zip(TARGET_COMPOSERS, sns.color_palette(env_config.color_pallete, len(TARGET_COMPOSERS))))

print(f"Total MIDI files found: {len(all_midi_df)}")

# Filter for required composers only

midi_df = all_midi_df[all_midi_df['composer'].isin(TARGET_COMPOSERS)].reset_index(drop=True)

print(f"Filtered MIDI files for target composers ({len(TARGET_COMPOSERS)}): {len(midi_df)}")

# Remove files with missing or invalid paths

midi_df = midi_df[midi_df['file_path'].apply(os.path.exists)].reset_index(drop=True)

# Preprocess MIDI files (clean, deduplicate, filter)

# midi_df = midi_processor.preprocess_midi_files(midi_df)

print(f"Valid MIDI files after preprocessing: {len(midi_df)}")

# Count files for required composers

required_composer_counts = midi_df['composer'].value_counts()

# Close any existing plots to start fresh

plt.close('all')

fig, axs = plt.subplots(1, 2, figsize=(12, 4))

# Plot 1: Bar chart of target composers

sns.barplot(

x=required_composer_counts.index,

y=required_composer_counts.values,

palette=env_config.color_pallete,

ax=axs[0]

)

axs[0].set_title('MIDI Files per Composer', fontsize=14)

axs[0].set_xlabel('Composer', fontsize=12)

axs[0].set_ylabel('Number of Files', fontsize=12)

# Add count numbers on top of the bars

for i, count in enumerate(required_composer_counts.values):
    axs[0].text(i, count + 5, f"{count}", ha='center')

# Plot 2: Pie chart showing proportion of composers

axs[1].pie(

required_composer_counts.values,

labels=required_composer_counts.index,

autopct='%1.1f%%',

startangle=90,

colors=[colors[composer] for composer in required_composer_counts.index],

shadow=True

)

axs[1].set_title('Proportion of MIDI Files by Composer', fontsize=14)

axs[1].axis('equal')

plt.tight_layout()

plt.show()

Loaded 1643 MIDI files from 4 composers in 0.02s
Total MIDI files found: 1643
Filtered MIDI files for target composers (4): 1643
Valid MIDI files after preprocessing: 1643

# To handle the data imbalance, we can sample a fixed number of files per composer
if SAMPLING:
    # groupby().head() keeps the first N files per composer (not a random sample)
    midi_df = midi_df.groupby('composer').head(SAMPLING_FILES_PER_COMPOSER).reset_index(drop=True)
    print(f"Reduced MIDI files to {SAMPLING_FILES_PER_COMPOSER} per composer: Total Files: {len(midi_df)}")
else:
    print("Sampling is disabled, using all available MIDI files.")

Sampling is disabled, using all available MIDI files.

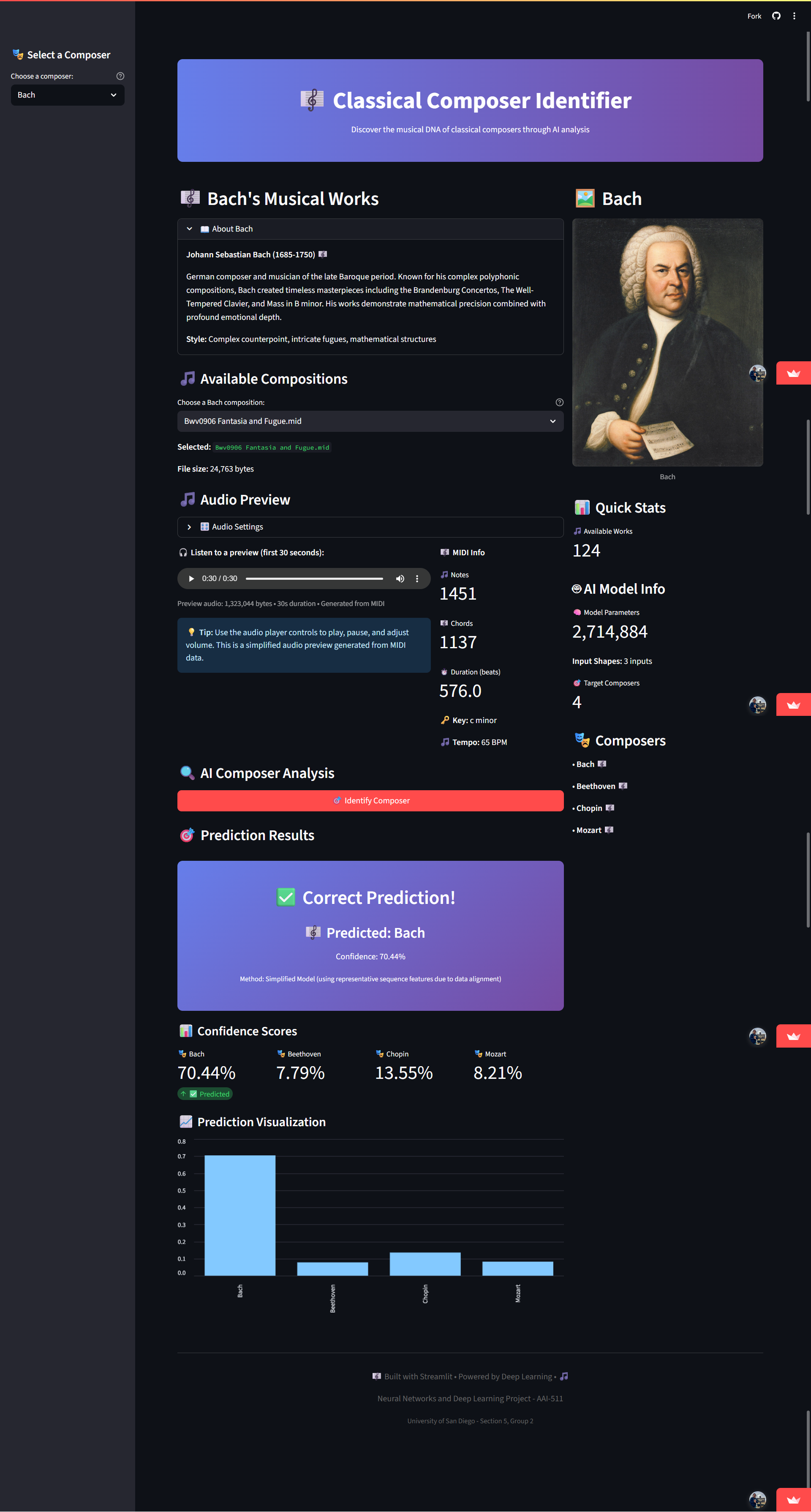

Dataset Summary and Class Distribution Analysis

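The dataset contains 1,643 MIDI files across 4 target composers (Bach, Mozart, Beethoven, and Chopin). All files were successfully loaded, matched the selected composers, and passed the file-path check without removals, indicating good overall data integrity. Note that the full preprocess_midi_files step (cleaning, deduplication, filtering) is commented out in this run, so duplicates have not yet been removed.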

Key Findings from our Visualizations:

Bar Chart – MIDI Files per Composer

- Bach has the largest number of files (1,024), followed by Mozart (257), Beethoven (220), and Chopin (136).

- This shows a significant difference in data availability among composers.

Pie Chart – Proportion of MIDI Files by Composer

- Bach's works make up 62.6% of the dataset.

- Mozart accounts for 15.7%, Beethoven 13.4%, and Chopin only 8.3%.

Data Imbalance Considerations:

The dataset is heavily imbalanced, with Bach dominating the sample size. Such imbalance can bias the deep learning model toward predicting Bach more often, potentially reducing classification accuracy for underrepresented composers like Chopin. This imbalance will need to be addressed during preprocessing or model training; possible strategies include data augmentation, class weighting, or sampling techniques that create a more balanced input distribution.
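Class weighting, one of the strategies listed above, can be sketched with inverse-frequency weights. This is a minimal illustration, not the project's training code: balanced_class_weights is a hypothetical helper, and it uses the same formula as scikit-learn's class_weight="balanced" option, n_samples / (n_classes * class_count).

```python
from collections import Counter


def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count).

    Rare classes get weights above 1 and common classes below 1, so the
    weighted sample count of every class comes out equal.
    """
    counts = Counter(labels)
    n_samples = sum(counts.values())
    n_classes = len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


# Usage with the per-composer counts reported in the bar chart above
labels = (["Bach"] * 1024 + ["Mozart"] * 257
          + ["Beethoven"] * 220 + ["Chopin"] * 136)
weights = balanced_class_weights(labels)
for composer, w in sorted(weights.items(), key=lambda kv: kv[1]):
    print(f"{composer}: {w:.2f}")
```

With these counts Chopin's samples would weigh roughly seven times as much as Bach's. After mapping composer names to integer class indices, a dict like this could be passed to Keras through the class_weight argument of model.fit.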

5.1 HarmonyAnalyzer

HarmonyAnalyzer – Purpose and Role in Feature Extraction

The HarmonyAnalyzer is a specialized preprocessing tool we designed to extract high-level harmonic and tonal features from MIDI data before feeding it into the LSTM and CNN composer classification model. These features go beyond note or pitch features by quantifying how composers use harmony, tonality, and modulation in their works, all key stylistic markers for distinguishing between composers. We expected this music-theory domain knowledge to give our model an edge.

Note: We also provide in-line code comments that detail each harmonic feature and its musical importance.

Why These Analyses Are Needed

Traditional statistical features (e.g., average pitch, tempo, note durations) are important; however, they miss some of the deeper stylistic patterns that define a composer’s “musical fingerprint,” so we chose to include those patterns as well.

Classical composers differ greatly in harmonic language, use of consonance and dissonance, modulation, and cadences. Capturing these traits yields a deeper, music-theory-based feature set, with the aim of improving composer prediction further down the pipeline.
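To make "use of consonance and dissonance" concrete, here is a minimal sketch of the window-level measurement the HarmonyAnalyzer performs: the fraction of note pairs in a window of consecutive notes whose interval class (semitones mod 12) is dissonant. window_dissonance and sliding_dissonance are illustrative helpers of our own; the dissonant set, 10-note window, and stride of 3 mirror the class code below.

```python
from itertools import combinations

# Interval classes (semitones mod 12) counted as dissonant, mirroring
# HarmonyAnalyzer.dissonant_intervals: m2, M2, tritone, m7, M7.
DISSONANT = {1, 2, 6, 10, 11}


def window_dissonance(midi_pitches):
    """Fraction of note pairs in a window whose interval class is dissonant."""
    pairs = list(combinations(midi_pitches, 2))
    if not pairs:
        return 0.0
    dissonant = sum(1 for a, b in pairs if abs(a - b) % 12 in DISSONANT)
    return dissonant / len(pairs)


def sliding_dissonance(midi_pitches, window=10, step=3):
    """Score every `step`-th window of `window` consecutive notes."""
    last_start = len(midi_pitches) - window
    return [window_dissonance(midi_pitches[i:i + window])
            for i in range(0, last_start + 1, step)]


print(window_dissonance([60, 64, 67]))  # C major triad -> 0.0
print(window_dissonance([60, 61, 62]))  # chromatic cluster -> 1.0
```

Because intervals are reduced mod 12, an octave counts as a unison and a minor ninth counts as a minor second; that is a simplification, not a claim about how listeners perceive compound intervals.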

Main Components of the HarmonyAnalyzer Class

Chord and Progression Analysis (analyze_chords_and_progressions)

- Extracts total and unique chord counts.

- Calculates chord quality ratios (major, minor, diminished, augmented).

- Detects occurrences of common classical progressions (e.g., I–V–vi–IV) using "old school" Roman numeral analysis.

- Measures chord inversion usage and root position frequency.

- Why: Different composers favor specific chord types and progressions, which can be strong stylistic indicators.

Dissonance Analysis (analyze_dissonance)

- Quantifies the average, maximum, and variance of dissonance over sliding 10-note windows.

- Measures tritone frequency (a historically important dissonance).

- Why: Composers vary in their tolerance for tension: Baroque composers may favor consonance, while Romantic composers may use richer dissonances.

Tonality Analysis (analyze_tonality)

- Calculates how often the tonic note and tonic chord appear in the melody and bass.

- Tracks tonic usage at phrase endings (cadential tonic ratio).

- Why: Staying “close to home” harmonically versus departing frequently is a defining compositional choice.

Key Modulation Analysis (analyze_key_modulations)

- Detects key changes across the score by combining three key-finding methods (Krumhansl-Schmuckler profiles, chord roots, and pitch-class counts) in sliding windows.

- Calculates modulation rate, key diversity, key stability, and distances between keys on the circle of fifths.

- Why: Some composers (e.g., Beethoven) modulate frequently to explore harmonic space, while others remain harmonically stable.

Cadence Analysis (analyze_cadences)

- Detects authentic (V–I), plagal (IV–I), and deceptive (V–vi) cadences.

- Calculates cadence type ratios and total cadences.

- Why: Cadence preferences can strongly signal the harmonic era and composer identity.
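The key-finding step inside Key Modulation Analysis is easiest to verify in isolation. Below is a minimal, stdlib-only sketch of Krumhansl-Schmuckler key finding plus a circle-of-fifths distance. find_key, pearson, and fifths_distance are our own illustrative helpers, not HarmonyAnalyzer methods; the profiles are the standard Krumhansl-Kessler values the class uses. The detail worth checking is the rotation direction: each profile must be shifted so that its tonic weight lands on the candidate tonic.

```python
# Krumhansl-Kessler probe-tone profiles (index 0 = tonic), the same values
# HarmonyAnalyzer uses.
MAJOR = [6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88]
MINOR = [6.33, 2.68, 3.52, 5.38, 2.60, 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17]
NAMES = ['C', 'C#', 'D', 'Eb', 'E', 'F', 'F#', 'G', 'Ab', 'A', 'Bb', 'B']


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / (var_x * var_y) ** 0.5


def find_key(pc_weights):
    """Return ((tonic_name, mode), r) for a 12-bin pitch-class histogram."""
    best_key, best_r = None, -1.0
    for tonic in range(12):
        for mode, profile in (('major', MAJOR), ('minor', MINOR)):
            # Shift the profile so its tonic weight sits on pitch class `tonic`
            rotated = [profile[(pc - tonic) % 12] for pc in range(12)]
            r = pearson(pc_weights, rotated)
            if r > best_r:
                best_key, best_r = (NAMES[tonic], mode), r
    return best_key, best_r


def fifths_distance(tonic_a, tonic_b):
    """Steps between two tonics on the circle of fifths (0 to 6)."""
    # A fifth is 7 semitones and 49 % 12 == 1, so the circle position of
    # pitch class pc is (7 * pc) % 12.
    pos_a = 7 * NAMES.index(tonic_a) % 12
    pos_b = 7 * NAMES.index(tonic_b) % 12
    d = abs(pos_a - pos_b)
    return min(d, 12 - d)


# A histogram shaped exactly like the G-major profile
g_major_like = [MAJOR[(pc - 7) % 12] for pc in range(12)]
print(find_key(g_major_like))
print(fifths_distance('G', 'F'))  # 2
```

Slicing profile[t:] + profile[:t] rotates in the opposite direction and would report F major for the same G-major input, which is why the explicit (pc - tonic) % 12 index is used here.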

Value for Model Preparation

By combining these advanced harmonic features with the statistical ones, the dataset gains musically meaningful, high-level descriptors that directly reflect compositional style. This gives the deep learning model access to nuanced harmonic behavior, with the aim of improving both classification accuracy and interpretability.
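Combining the analyzers' outputs means merging several feature dicts into one fixed-order vector per file. The sketch below is a hypothetical helper, not the project's actual assembly code; the feature names come from the class below, while the column order and the example values are illustrative assumptions. Missing features default to 0, consistent with the analyzers' empty_*_features fallbacks, so every file yields a vector of the same length.

```python
def to_feature_vector(feature_dicts, feature_order, default=0.0):
    """Merge per-analyzer feature dicts into one fixed-order list of floats.

    Raises ValueError if two analyzers emit the same feature name, since a
    silent overwrite would hide a bug. Missing names get `default`, which
    keeps every file's vector the same length.
    """
    merged = {}
    for features in feature_dicts:
        overlap = merged.keys() & features.keys()
        if overlap:
            raise ValueError(f"duplicate feature names: {sorted(overlap)}")
        merged.update(features)
    return [float(merged.get(name, default)) for name in feature_order]


# Hypothetical column order and illustrative values
FEATURE_ORDER = ['major_chord_ratio', 'avg_dissonance',
                 'tonic_frequency', 'key_modulation_count']

harmony = {'major_chord_ratio': 0.55}
dissonance = {'avg_dissonance': 0.21}
modulation = {'key_modulation_count': 3}  # tonality features missing here
print(to_feature_vector([harmony, dissonance, modulation], FEATURE_ORDER))
# [0.55, 0.21, 0.0, 3.0]
```

Fixing the column order once, rather than relying on dict insertion order per file, keeps the feature columns aligned across all files fed to the model.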

class HarmonyAnalyzer:
    """Analyzer for harmony-related features."""

    def __init__(self, np_module):
        self.np = np_module
        self.has_gpu = np_module.__name__ == 'cupy'

        # Perfect/major/minor consonances (interval classes in semitones)
        self.consonant_intervals = {0, 3, 4, 5, 7, 8, 9, 12}
        # The more dissonant intervals (m2, M2, tritone, m7, M7)
        self.dissonant_intervals = {1, 2, 6, 10, 11}

        # Common classical chord progressions, written as scale degrees
        self.common_progressions = {
            'I-V-vi-IV': [(1, 5, 6, 4)],
            'vi-IV-I-V': [(6, 4, 1, 5)],
            'I-vi-IV-V': [(1, 6, 4, 5)],
            'ii-V-I': [(2, 5, 1)],
            'I-IV-V-I': [(1, 4, 5, 1)],
            'vi-ii-V-I': [(6, 2, 5, 1)]
        }

        # Position of each tonic on the circle of fifths (C=0, G=1, ..., F=11),
        # so distances can wrap around at 12. Enharmonic spellings share a slot.
        self.circle_of_fifths = {
            'C': 0, 'G': 1, 'D': 2, 'A': 3, 'E': 4, 'B': 5,
            'F#': 6, 'Gb': 6, 'C#': 7, 'Db': 7, 'G#': 8, 'Ab': 8,
            'D#': 9, 'Eb': 9, 'A#': 10, 'Bb': 10, 'F': 11
        }

        # Relative major/minor relationships, spelled to match _pc_to_name()
        self.relative_keys = {
            ('C', 'major'): ('A', 'minor'),
            ('G', 'major'): ('E', 'minor'),
            ('D', 'major'): ('B', 'minor'),
            ('A', 'major'): ('F#', 'minor'),
            ('E', 'major'): ('C#', 'minor'),
            ('B', 'major'): ('Ab', 'minor'),   # G# minor
            ('F#', 'major'): ('Eb', 'minor'),  # D# minor
            ('C#', 'major'): ('Bb', 'minor'),  # Db major
            ('Ab', 'major'): ('F', 'minor'),
            ('Eb', 'major'): ('C', 'minor'),
            ('Bb', 'major'): ('G', 'minor'),
            ('F', 'major'): ('D', 'minor'),
        }
        # Add reverse mappings (minor key -> relative major)
        for major_key, minor_key in list(self.relative_keys.items()):
            self.relative_keys[minor_key] = major_key

    def analyze_chords_and_progressions(self, score, window_size, overlap, shared_data):
        """Analyze chord qualities, progressions, and inversions.

        We embrace the "old school" analysis process here. Roman numerals
        are traditionally used in music analysis to show how composers move
        from one idea, or one feeling, to the next through their chosen
        chord progressions. How often a composer uses each chord quality
        (major, minor, augmented, diminished) reveals stylistic tendencies
        and compositional choices. Chord inversions, which describe how the
        notes of a chord are stacked above the bass, are a related
        indicator of harmonic style.
        """
        features = {}
        try:
            # Use shared data
            chords = shared_data.get('chords', [])
            key_signature = shared_data.get('key_signature')
            if not chords or not key_signature:
                return self.empty_harmony_features()

            features['total_chords'] = len(chords)

            # Use batch processing for better performance
            batch_size = min(100, max(1, len(chords) // 2))

            # Count distinct chords by their pitched common name
            pitched_common_names = [c.pitchedCommonName for c in chords
                                    if hasattr(c, 'pitchedCommonName')]
            features['unique_chords'] = len(set(pitched_common_names))

            # Process chords in batches using a thread pool
            chord_qualities, roman_numerals, inversions = self._process_chord_batches(
                chords, key_signature, batch_size)

            # Calculate chord type ratios
            quality_counts = Counter(chord_qualities)
            chord_count = len(chords) if chords else 1  # Avoid division by zero
            features['major_chord_ratio'] = quality_counts.get('major', 0) / chord_count
            features['minor_chord_ratio'] = quality_counts.get('minor', 0) / chord_count
            features['diminished_chord_ratio'] = quality_counts.get('diminished', 0) / chord_count
            features['augmented_chord_ratio'] = quality_counts.get('augmented', 0) / chord_count

            # Chord progression analysis
            if len(roman_numerals) >= 4:
                progression_counts = self._find_chord_progressions(roman_numerals)
                features.update(progression_counts)
            else:
                for prog_name in self.common_progressions:
                    features[f'progression_{prog_name.lower().replace("-", "_")}_count'] = 0

            # Chord inversion analysis
            if inversions:
                inv_array = self.np.array(inversions, dtype=float)
                features['avg_chord_inversion'] = float(self.np.mean(inv_array))
                features['root_position_ratio'] = inversions.count(0) / len(inversions)
            else:
                features['avg_chord_inversion'] = 0
                features['root_position_ratio'] = 0
        except Exception:
            features = self.empty_harmony_features()
        return features

    def _process_chord_batches(self, chords, key_signature, batch_size):
        """Process chords in batches for better performance."""

        def process_chord_batch(chord_batch):
            qualities = []
            rns = []
            invs = []
            for c in chord_batch:
                # Compute all three values before appending, so a failure
                # part-way through cannot leave the three lists misaligned
                try:
                    quality = c.quality
                    figure = roman.romanNumeralFromChord(c, key_signature).figure
                    inversion = c.inversion()
                except Exception:
                    quality, figure, inversion = 'unknown', 'Unknown', 0
                qualities.append(quality)
                rns.append(figure)
                invs.append(inversion)
            return qualities, rns, invs

        # Split chords into batches
        chord_batches = [chords[i:i + batch_size] for i in range(0, len(chords), batch_size)]

        # Process batches in parallel
        chord_qualities = []
        roman_numerals = []
        inversions = []
        with ThreadPoolExecutor() as executor:
            batch_results = list(executor.map(process_chord_batch, chord_batches))

        # Combine results
        for qualities, rns, invs in batch_results:
            chord_qualities.extend(qualities)
            roman_numerals.extend(rns)
            inversions.extend(invs)
        return chord_qualities, roman_numerals, inversions

    def _find_chord_progressions(self, roman_numerals):
        """Find common chord progression patterns."""
        progression_counts = {}

        # Numerals ordered longest-first so prefix matching cannot confuse
        # 'iii' with 'ii' or 'vii' with 'vi' (matching is case-insensitive)
        numeral_degrees = [('vii', 7), ('iii', 3), ('vi', 6), ('iv', 4),
                           ('ii', 2), ('v', 5), ('i', 1)]

        # Convert Roman numeral figures (e.g. 'V7', 'viio6', 'IV') to scale degrees
        scale_degrees = []
        for rn in roman_numerals:
            rn_lower = rn.lower()
            degree = 0  # Unknown, including figures that start with an accidental
            for numeral, value in numeral_degrees:
                if rn_lower.startswith(numeral):
                    degree = value
                    break
            scale_degrees.append(degree)

        # Convert to an array for pattern matching
        scale_degrees_array = self.np.array(scale_degrees)

        # Count each progression pattern with a sliding window
        for prog_name, patterns in self.common_progressions.items():
            count = 0
            for pattern in patterns:
                pattern_length = len(pattern)
                if len(scale_degrees) >= pattern_length:
                    if self.has_gpu and len(scale_degrees) > 1000:
                        # GPU path: compare array windows on the device
                        pattern_array = self.np.array(pattern)
                        matches = 0
                        for i in range(len(scale_degrees) - pattern_length + 1):
                            window = scale_degrees_array[i:i + pattern_length]
                            if self.np.array_equal(window, pattern_array):
                                matches += 1
                        count += matches
                    else:
                        # CPU path: compare tuples
                        pattern_tuple = tuple(pattern)
                        for i in range(len(scale_degrees) - pattern_length + 1):
                            if tuple(scale_degrees[i:i + pattern_length]) == pattern_tuple:
                                count += 1
            progression_counts[f'progression_{prog_name.lower().replace("-", "_")}_count'] = count
        return progression_counts

    def analyze_dissonance(self, score, window_size, overlap, shared_data):
        """Analyze dissonance measurements.

        Dissonance in music theory describes the perceptual clash, or
        tension, between two or more notes, whether they sound together
        (e.g. in a triad) or melodically over time. It is perceptual: one
        listener may find a passage more dissonant than another would
        (experimental jazz vs. orderly Mozart). Even so, we share broad
        expectations about which note relationships sound tense. More
        dissonance means less consonance, and vice versa, which makes it a
        useful measurement for our classification purposes.
        """
        features = {}
        try:
            # Use shared data
            notes = shared_data.get('notes', [])
            # Early return for empty notes
            if not notes:
                return self.empty_dissonance_features()

            # Extract MIDI pitch numbers from pitched notes
            all_pitches = []
            for note in notes:
                if hasattr(note, 'pitch'):
                    all_pitches.append(note.pitch.midi)
            if not all_pitches:
                return self.empty_dissonance_features()

            # Convert to an array for faster processing
            all_pitches_array = self.np.array(all_pitches)

            # Score sliding windows of notes
            window_size_notes = 10  # Fixed size for consistent analysis
            dissonance_samples = self._analyze_dissonance_windows(all_pitches_array, window_size_notes)

            # Calculate statistics
            if dissonance_samples:
                dissonance_array = self.np.array(dissonance_samples)
                features['avg_dissonance'] = float(self.np.mean(dissonance_array))
                features['max_dissonance'] = float(self.np.max(dissonance_array))
                features['dissonance_variance'] = float(self.np.var(dissonance_array))
            else:
                features['avg_dissonance'] = 0
                features['max_dissonance'] = 0
                features['dissonance_variance'] = 0

            # Tritone analysis: fraction of consecutive melodic intervals
            # whose interval class is a tritone
            if len(all_pitches_array) > 1:
                intervals = self.np.abs(self.np.diff(all_pitches_array)) % 12
                tritone_count = self.np.sum(intervals == 6)
                features['tritone_frequency'] = float(tritone_count) / len(intervals)
            else:
                features['tritone_frequency'] = 0
        except Exception:
            features = self.empty_dissonance_features()
        return features

    def _analyze_dissonance_windows(self, all_pitches_array, window_size_notes):
        """Return the dissonant-pair ratio for windows taken every 3 notes."""
        # Optimize for large datasets with adaptive chunking
        num_chunks = min(os.cpu_count() or 4, 8)
        chunk_size = max(10, len(all_pitches_array) // num_chunks)

        def analyze_windows_batch(start_idx, end_idx):
            batch_results = []
            last_start = len(all_pitches_array) - window_size_notes + 1
            for i in range(start_idx, min(end_idx, last_start), 3):
                window = all_pitches_array[i:i + window_size_notes]
                if len(window) < 2:
                    continue
                if self.has_gpu:
                    # GPU version: pairwise interval matrix
                    pitches_col = window.reshape(-1, 1)
                    pitches_row = window.reshape(1, -1)
                    interval_matrix = self.np.abs(pitches_col - pitches_row) % 12
                    # Upper triangle, excluding the diagonal (each pair once)
                    intervals = interval_matrix[self.np.triu_indices_from(interval_matrix, k=1)]
                    # Count dissonant intervals
                    dissonant_count = 0
                    for interval in intervals:
                        if int(interval) in self.dissonant_intervals:
                            dissonant_count += 1
                    if len(intervals) > 0:
                        batch_results.append(dissonant_count / len(intervals))
                else:
                    # CPU version: explicit pairwise loop
                    window_dissonance = 0
                    note_pairs = 0
                    for j in range(len(window)):
                        for k in range(j + 1, len(window)):
                            interval_semitones = int(abs(window[j] - window[k])) % 12
                            if interval_semitones in self.dissonant_intervals:
                                window_dissonance += 1
                            note_pairs += 1
                    if note_pairs > 0:
                        batch_results.append(window_dissonance / note_pairs)
            return batch_results

        # Divide work into chunks
        chunk_starts = list(range(0, len(all_pitches_array), chunk_size))
        chunk_ends = [min(start + chunk_size, len(all_pitches_array)) for start in chunk_starts]

        # Process chunks in parallel
        with ThreadPoolExecutor() as executor:
            all_results = list(executor.map(analyze_windows_batch, chunk_starts, chunk_ends))

        # Flatten results
        dissonance_samples = []
        for result in all_results:
            dissonance_samples.extend(result)
        return dissonance_samples

    def analyze_tonality(self, score, window_size, overlap, shared_data):
        """Analyze how often the tonic is reached.

        This matters because it shows how closely a composer stays to the
        'home' key versus how often the music drifts into other keys or
        passages. A composer like Mozart may feel more at home in the tonic,
        whereas Chopin might wander away more often to create a sense of
        harmonic drift.
        """
        features = {}
        try:
            key_signature = shared_data.get('key_signature')
            notes = shared_data.get('notes', [])
            chords = shared_data.get('chords', [])
            if not key_signature or not notes:
                return self.empty_tonality_features()

            tonic_pitch = key_signature.tonic
            tonic_name = tonic_pitch.name

            # Count tonic occurrences among notes
            tonic_hits, bass_tonic_hits = self._count_tonic_notes(notes, tonic_name)

            # Count chords rooted on the tonic
            tonic_chord_count = self._count_tonic_chords(chords, tonic_name)

            # Calculate ratios
            total_notes = len(notes) if notes else 1  # Avoid division by zero
            features['tonic_frequency'] = tonic_hits / total_notes
            features['bass_tonic_frequency'] = bass_tonic_hits / total_notes
            features['tonic_chord_ratio'] = tonic_chord_count / len(chords) if chords else 0

            # Check which phrase-ending chords are rooted on the tonic
            phrase_endings = self._identify_phrase_endings(score)
            cadential_tonic = 0
            for ending_chord in phrase_endings:
                try:
                    if ending_chord.root().name == tonic_name:
                        cadential_tonic += 1
                except Exception:
                    continue
            features['cadential_tonic_ratio'] = cadential_tonic / len(phrase_endings) if phrase_endings else 0
        except Exception:
            features = self.empty_tonality_features()
        return features

    def _count_tonic_notes(self, notes, tonic_name):
        """Count tonic pitches, plus tonic bass notes for objects exposing bass()."""

        def process_notes_batch(note_batch):
            tonic_count = 0
            bass_tonic_count = 0
            for note in note_batch:
                try:
                    if hasattr(note, 'pitch') and note.pitch.name == tonic_name:
                        tonic_count += 1
                    elif hasattr(note, 'bass') and note.bass().name == tonic_name:
                        bass_tonic_count += 1
                except Exception:
                    continue
            return tonic_count, bass_tonic_count

        # Split into batches
        batch_size = 100
        note_batches = [notes[i:i + batch_size] for i in range(0, len(notes), batch_size)]

        # Process batches in parallel
        tonic_hits = 0
        bass_tonic_hits = 0
        with ThreadPoolExecutor() as executor:
            note_results = list(executor.map(process_notes_batch, note_batches))
        for t_count, bt_count in note_results:
            tonic_hits += t_count
            bass_tonic_hits += bt_count
        return tonic_hits, bass_tonic_hits

    def _count_tonic_chords(self, chords, tonic_name):
        """Count chords whose root is the tonic."""

        def process_chords_batch(chord_batch):
            tonic_chord_count = 0
            for c in chord_batch:
                try:
                    if c.root().name == tonic_name:
                        tonic_chord_count += 1
                except Exception:
                    continue
            return tonic_chord_count

        # Split into batches
        batch_size = 100
        chord_batches = [chords[i:i + batch_size] for i in range(0, len(chords), batch_size)]

        # Process batches in parallel
        with ThreadPoolExecutor() as executor:
            chord_results = list(executor.map(process_chords_batch, chord_batches))
        return sum(chord_results)

    def analyze_key_modulations(self, score, window_size, overlap, shared_data):
        """Count key modulations using windowed key analysis.

        Modulation is a move from one key center to another, e.g. from
        C major to Ab minor (a distantly related key). Classical composers
        modulate when they want to leave one mood or tonal center for a new
        avenue of expression. Some composers do this more than others,
        which makes modulation a valuable feature to measure from our MIDI
        files.
        """
        features = {}
        try:
            duration = shared_data.get('total_duration', 0) if shared_data else score.duration.quarterLength
            if duration == 0 or duration < window_size:
                return self.empty_modulation_features()

            # Get note events for analysis
            note_events = self._extract_note_events(score)
            if len(note_events) < 10:  # Need a minimum number of notes
                return self.empty_modulation_features()

            # Analyze keys using multiple methods
            window_keys = self._analyze_keys_robust(score, note_events, window_size, overlap, duration)
            if len(window_keys) < 2:
                return self.empty_modulation_features()

            # Filter and smooth key sequence
            smoothed_keys = self._smooth_key_sequence(window_keys)

            # Detect meaningful modulations
            modulations = self._detect_meaningful_modulations(smoothed_keys)

            # Calculate features
            features = self._calculate_modulation_features(modulations, smoothed_keys, duration)
        except Exception as e:
            print(f"Error in key modulation analysis: {e}")
            features = self.empty_modulation_features()
        return features

    def _extract_note_events(self, score):
        """Extract note events with absolute timing information."""
        note_events = []
        # flatten() yields offsets measured from the start of the score;
        # offsets seen via recurse() are relative to each element's measure
        for element in score.flatten().notes:
            if hasattr(element, 'pitch'):
                note_events.append({
                    'pitch': element.pitch.midi,
                    'pitch_class': element.pitch.pitchClass,
                    'offset': float(element.offset),
                    'duration': float(element.duration.quarterLength)
                })
        # Sort by onset time
        note_events.sort(key=lambda x: x['offset'])
        return note_events

    def _analyze_keys_robust(self, score, note_events, window_size, overlap, total_duration):
        """Robust key analysis: vote across three key-finding methods per window."""
        step_size = window_size * (1 - overlap)
        window_keys = []
        if step_size <= 0:
            # overlap >= 1 would never advance the window (infinite loop)
            return window_keys
        current_offset = 0
        while current_offset < total_duration - window_size / 2:
            window_end = current_offset + window_size
            # Get notes in current window
            window_notes = [
                note for note in note_events
                if current_offset <= note['offset'] < window_end
            ]
            if len(window_notes) < 3:  # Need a minimum number of notes
                current_offset += step_size
                continue
            # Method 1: Krumhansl-Schmuckler key-finding
            key1 = self._krumhansl_schmuckler_key(window_notes)
            # Method 2: Chord-based key finding
            key2 = self._chord_based_key_finding(score, current_offset, window_end)
            # Method 3: Simple pitch class distribution
            key3 = self._pitch_class_key_finding(window_notes)
            # Consensus or best guess
            final_key = self._consensus_key([key1, key2, key3])
            if final_key:
                window_keys.append({
                    'key': final_key,
                    'offset': current_offset,
                    'confidence': self._calculate_key_confidence(window_notes, final_key)
                })
            current_offset += step_size
        return window_keys

    def _krumhansl_schmuckler_key(self, window_notes):
        """Krumhansl-Schmuckler key-finding algorithm."""
        if not window_notes:
            return None

        # Krumhansl-Kessler probe-tone profiles (index 0 = tonic)
        major_profile = [6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88]
        minor_profile = [6.33, 2.68, 3.52, 5.38, 2.60, 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17]

        # Duration-weighted pitch class distribution
        pc_distribution = [0] * 12
        for note in window_notes:
            pc_distribution[note['pitch_class']] += note['duration']

        # Normalize
        total = sum(pc_distribution)
        if total == 0:
            return None
        pc_distribution = [x / total for x in pc_distribution]

        # Find best key
        best_correlation = -1
        best_key = None
        for tonic in range(12):
            # Rotate each profile so its tonic weight (index 0) lands on
            # pitch class `tonic`: rotated[pc] = profile[(pc - tonic) % 12]
            major_rotated = [major_profile[(pc - tonic) % 12] for pc in range(12)]
            minor_rotated = [minor_profile[(pc - tonic) % 12] for pc in range(12)]
            # Major and minor key correlations
            major_corr = self._correlation(pc_distribution, major_rotated)
            minor_corr = self._correlation(pc_distribution, minor_rotated)
            if major_corr > best_correlation:
                best_correlation = major_corr
                best_key = (self._pc_to_name(tonic), 'major')
            if minor_corr > best_correlation:
                best_correlation = minor_corr
                best_key = (self._pc_to_name(tonic), 'minor')

        # Only accept reasonably confident matches
        return best_key if best_correlation > 0.6 else None

    def _chord_based_key_finding(self, score, start_offset, end_offset):
        """Find key based on chord roots in the window."""
        try:
            # Extract the measures covering the window; assumes 4 quarter
            # notes per measure (4/4), so other meters map only approximately
            window_stream = score.measures(
                numberStart=int(start_offset / 4) + 1,
                numberEnd=int(end_offset / 4) + 1
            )
            # Collect roots of chords with at least two pitches
            chordified = window_stream.chordify()
            chord_roots = []
            for ch in chordified.recurse().getElementsByClass('Chord'):
                if len(ch.pitches) >= 2:
                    chord_roots.append(ch.root().pitchClass)
            if not chord_roots:
                return None

            # Simple chord-to-key mapping
            chord_counts = Counter(chord_roots)
            most_common_root = chord_counts.most_common(1)[0][0]

            # Heuristic: assume a major key on the most common chord root
            return (self._pc_to_name(most_common_root), 'major')
        except Exception:
            return None

    def _pitch_class_key_finding(self, window_notes):
        """Simple pitch class distribution key finding."""
        if not window_notes:
            return None
        pc_counts = Counter(note['pitch_class'] for note in window_notes)
        if not pc_counts:
            return None

        # Treat the most common pitch class as the tonic
        tonic_pc = pc_counts.most_common(1)[0][0]

        # Simple heuristic for major vs. minor: compare how often the
        # major third and the minor third above the tonic occur
        total_notes = len(window_notes)
        third_major = (tonic_pc + 4) % 12
        third_minor = (tonic_pc + 3) % 12
        major_evidence = pc_counts.get(third_major, 0) / total_notes
        minor_evidence = pc_counts.get(third_minor, 0) / total_notes
        mode = 'major' if major_evidence >= minor_evidence else 'minor'
        return (self._pc_to_name(tonic_pc), mode)

    def _consensus_key(self, key_candidates):
        """Get consensus from multiple key-finding methods."""
        valid_keys = [k for k in key_candidates if k is not None]
        if not valid_keys:
            return None
        if len(valid_keys) == 1:
            return valid_keys[0]

        # Count votes
        key_votes = Counter(valid_keys)

        # If at least two methods agree, use that key
        most_common = key_votes.most_common(1)[0]
        if most_common[1] > 1:
            return most_common[0]

        # Otherwise, fall back to the first valid result, which is the
        # Krumhansl-Schmuckler key whenever that method returned one
        return valid_keys[0]

    def _smooth_key_sequence(self, window_keys, min_duration=2):
        """Remove brief key changes that are likely analysis errors."""
        if len(window_keys) <= 2:
            return window_keys
        smoothed = []
        i = 0
        while i < len(window_keys):
            current_key = window_keys[i]['key']
            # Look ahead to see how many windows this key lasts
            duration = 1
            j = i + 1
            while j < len(window_keys) and window_keys[j]['key'] == current_key:
                duration += 1
                j += 1
            # Keep runs that last long enough, or that start at the first or
            # last window; skip shorter runs as likely analysis noise
            if duration >= min_duration or i == 0 or i == len(window_keys) - 1:
                smoothed.extend(window_keys[i:i + duration])
            # j always points just past the current run
            i = j
        return smoothed

    def _detect_meaningful_modulations(self, smoothed_keys):
        """Detect meaningful modulations (ignoring relative major/minor changes)."""
        if len(smoothed_keys) < 2:
            return []
        modulations = []
        prev_key = smoothed_keys[0]['key']
        for key_info in smoothed_keys[1:]:
            current_key = key_info['key']
            if current_key != prev_key:
                # Check if this is a meaningful modulation
                modulation_type = self._classify_modulation(prev_key, current_key)
                if modulation_type != 'relative':  # Filter out relative major/minor
                    modulations.append({
                        'from_key': prev_key,
                        'to_key': current_key,
                        'type': modulation_type,
                        'offset': key_info['offset'],
                        'distance': self._key_distance(prev_key, current_key)
                    })
            prev_key = current_key
        return modulations

    def _classify_modulation(self, key1, key2):
        """Classify the type of modulation."""
        if key1 == key2:
            return 'none'
        # Check if relative major/minor
        if key1 in self.relative_keys and self.relative_keys[key1] == key2:
            return 'relative'
        # Check circle of fifths distance
        distance = self._key_distance(key1, key2)
        if distance <= 1:
            return 'close'  # Adjacent on circle of fifths (or parallel major/minor)
        elif distance <= 2:
            return 'medium'
        else:
            return 'distant'

    def _key_distance(self, key1, key2):
        """Calculate distance between keys on the circle of fifths."""
        try:
            pos1 = self.circle_of_fifths.get(key1[0], 0)
            pos2 = self.circle_of_fifths.get(key2[0], 0)
            # Handle wraparound (tonics are at most 6 steps apart)
            distance = min(abs(pos1 - pos2), 12 - abs(pos1 - pos2))
            # Add penalty for major/minor difference
            if key1[1] != key2[1]:
                distance += 0.5
            return distance
        except Exception:
            return 6  # Maximum distance

def _calculate_modulation_features(self, modulations, smoothed_keys, duration):

"""Calculate comprehensive modulation features"""

features = {}

# Basic counts

features['key_modulation_count'] = len(modulations)

features['modulation_rate'] = len(modulations) / duration if duration > 0 else 0

features['avg_modulations_per_minute'] = (len(modulations) / duration) * 60 if duration > 0 else 0

# Key diversity

unique_keys = set(key_info['key'] for key_info in smoothed_keys)

features['unique_keys'] = len(unique_keys)

# Key stability (percentage of time in most common key)

if smoothed_keys:

key_counts = Counter(key_info['key'] for key_info in smoothed_keys)

most_common_count = key_counts.most_common(1)[0][1]

features['key_stability'] = most_common_count / len(smoothed_keys)

else:

features['key_stability'] = 1.0

# Modulation characteristics

if modulations:

distances = [mod['distance'] for mod in modulations]

features['avg_modulation_distance'] = np.mean(distances)

features['max_modulation_distance'] = max(distances)

# Count modulation types

types = [mod['type'] for mod in modulations]

type_counts = Counter(types)

features['close_modulations'] = type_counts.get('close', 0)

features['medium_modulations'] = type_counts.get('medium', 0)

features['distant_modulations'] = type_counts.get('distant', 0)

else:

features['avg_modulation_distance'] = 0

features['max_modulation_distance'] = 0

features['close_modulations'] = 0

features['medium_modulations'] = 0

features['distant_modulations'] = 0

return features
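```python
# Sketch of the summary statistics in _calculate_modulation_features,
# computed from plain key labels (the labels and numbers below are made up).
# It assumes `duration` is in seconds, which is what the x 60 per-minute
# conversion implies; if durations were music21 quarter-length offsets, the
# per-minute figure would need rescaling.
from collections import Counter


def modulation_summary(window_keys, n_modulations, duration):
    counts = Counter(window_keys)
    rate = n_modulations / duration if duration > 0 else 0.0
    # Share of analysis windows spent in the most common key
    stability = (counts.most_common(1)[0][1] / len(window_keys)
                 if window_keys else 1.0)
    return {
        'modulation_rate': rate,
        'avg_modulations_per_minute': rate * 60,
        'unique_keys': len(counts),
        'key_stability': stability,
    }


summary = modulation_summary(['C', 'C', 'C', 'G', 'C', 'a'],
                             n_modulations=3, duration=90.0)
print(summary)
```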

# Helper methods

def _correlation(self, x, y):

"""Calculate Pearson correlation coefficient"""

if len(x) != len(y):

return 0

n = len(x)

if n == 0:

return 0

sum_x = sum(x)

sum_y = sum(y)

sum_xy = sum(x[i] * y[i] for i in range(n))

sum_x2 = sum(x[i] ** 2 for i in range(n))

sum_y2 = sum(y[i] ** 2 for i in range(n))

denominator = ((n * sum_x2 - sum_x ** 2) * (n * sum_y2 - sum_y ** 2)) ** 0.5

if denominator == 0:

return 0

return (n * sum_xy - sum_x * sum_y) / denominator
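```python
# Sketch: the same single-pass Pearson formula as _correlation,
#   r = (n*Sxy - Sx*Sy) / sqrt((n*Sxx - Sx^2) * (n*Syy - Sy^2)),
# with one extra guard: floating-point rounding can push a variance term
# slightly below zero for near-constant input, and ** 0.5 of a negative
# float returns a complex number in Python 3, so the terms are clamped at 0.
import math


def pearson(x, y):
    n = len(x)
    if n == 0 or n != len(y):
        return 0.0
    sx, sy = sum(x), sum(y)
    sxy = sum(a * b for a, b in zip(x, y))
    sxx = sum(a * a for a in x)
    syy = sum(b * b for b in y)
    var_x = max(n * sxx - sx * sx, 0.0)  # n^2 times the variance of x
    var_y = max(n * syy - sy * sy, 0.0)  # n^2 times the variance of y
    denom = math.sqrt(var_x * var_y)
    if denom == 0:
        return 0.0
    return (n * sxy - sx * sy) / denom


print(pearson([1, 2, 3], [2, 4, 6]))  # 1.0
print(pearson([1, 2, 3], [6, 4, 2]))  # -1.0
print(pearson([1, 1, 1], [1, 2, 3]))  # 0.0 (constant input)
```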

def _pc_to_name(self, pc):

"""Convert pitch class number to note name"""

names = ['C', 'C#', 'D', 'Eb', 'E', 'F', 'F#', 'G', 'Ab', 'A', 'Bb', 'B']

return names[pc % 12]

def _calculate_key_confidence(self, window_notes, key):

"""Calculate confidence score for a key assignment"""

if not key or not window_notes:

return 0.0

# Simple confidence based on how well the notes fit the key

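# _key_distance treats circle_of_fifths values as positions on the circle

# (C=0, G=1, D=2, ...), not pitch classes. Each step is a perfect fifth

# (7 semitones), so (position * 7) % 12 gives the tonic pitch class (G -> 7).

tonic_pc = (self.circle_of_fifths.get(key[0], 0) * 7) % 12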

# Key notes for major/minor

if key[1] == 'major':

key_pcs = [(tonic_pc + i) % 12 for i in [0, 2, 4, 5, 7, 9, 11]]

else:

key_pcs = [(tonic_pc + i) % 12 for i in [0, 2, 3, 5, 7, 8, 10]]

in_key = sum(1 for note in window_notes if note['pitch_class'] in key_pcs)

total = len(window_notes)

return in_key / total if total > 0 else 0.0
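```python
# Sketch of the diatonic-fit confidence in _calculate_key_confidence: the
# fraction of notes whose pitch class lies in the key's scale. It assumes
# tonics are stored as circle-of-fifths positions (C=0, G=1, ...), so a
# position is converted to a pitch class with (position * 7) % 12. Minor
# keys use the natural-minor scale, as the method above does.
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]
NATURAL_MINOR_STEPS = [0, 2, 3, 5, 7, 8, 10]


def key_confidence(pitch_classes, tonic_position, mode):
    if not pitch_classes:
        return 0.0
    tonic_pc = (tonic_position * 7) % 12  # each circle step is 7 semitones
    steps = MAJOR_STEPS if mode == 'major' else NATURAL_MINOR_STEPS
    scale = {(tonic_pc + s) % 12 for s in steps}
    in_key = sum(1 for pc in pitch_classes if pc in scale)
    return in_key / len(pitch_classes)


# G major is position 1 on the circle -> tonic pitch class 7 (G)
print(key_confidence([7, 9, 11, 0, 2], 1, 'major'))  # 1.0
print(key_confidence([7, 9, 11, 0, 5], 1, 'major'))  # 0.8 (F natural is outside)
```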

def empty_modulation_features(self):

"""Return empty/default modulation features"""

return {

'key_modulation_count': 0,

'modulation_rate': 0.0,

'avg_modulations_per_minute': 0.0,

'unique_keys': 1,

'key_stability': 1.0,

'avg_modulation_distance': 0.0,

'max_modulation_distance': 0.0,

'close_modulations': 0,

'medium_modulations': 0,

'distant_modulations': 0

}

def analyze_cadences(self, score, window_size, overlap, shared_data):

"""Analyze cadential patterns.

Cadences show how a composer resolves a phrase or closes a musical idea.

In particular eras of classical music, cadences are quite predictable.

For example, many Western sacred music styles used plagal and

authentic cadences to give a feeling of finality to a section of

music. We use three types for this exercise (authentic V-I, plagal IV-I,

and deceptive V-vi), but many others could be added in further analysis.

We also count the total number of cadences, since it shows the composer's

tendency to resolve over time: how often phrases resolve is a measurable

proxy for how often the music returns to its tonal home. Note that every

qualifying chord pair is counted, not only pairs at phrase endings.

"""

features = {}

try:

# Use shared data

chords = shared_data.get('chords', [])

key_signature = shared_data.get('key_signature')

if len(chords) < 2 or not key_signature:

return self.empty_cadence_features()

# Detect cadences

authentic, plagal, deceptive = self._detect_cadences(chords, key_signature)

total_cadences = authentic + plagal + deceptive

# Calculate ratios

if total_cadences > 0:

features['authentic_cadence_ratio'] = authentic / total_cadences

features['plagal_cadence_ratio'] = plagal / total_cadences

features['deceptive_cadence_ratio'] = deceptive / total_cadences

else:

features['authentic_cadence_ratio'] = 0

features['plagal_cadence_ratio'] = 0

features['deceptive_cadence_ratio'] = 0

features['total_cadences'] = total_cadences

except Exception:

features = self.empty_cadence_features()

return features

def _detect_cadences(self, chords, key_signature):

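"""Count authentic (V-I), plagal (IV-I) and deceptive (V-vi) chord pairs."""

# Scan adjacent chord pairs in contiguous batches on a thread pool. This is

# pure-Python, CPU-bound work, so the GIL limits the speedup threads can give.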

batch_size = min(50, max(1, len(chords) // 4))

def analyze_chord_pairs(start_idx, end_idx):

authentic = 0

plagal = 0

deceptive = 0

for i in range(start_idx, min(end_idx, len(chords) - 1)):

try:

current_rn = roman.romanNumeralFromChord(chords[i], key_signature)

next_rn = roman.romanNumeralFromChord(chords[i+1], key_signature)

# V-I (authentic cadence)

if current_rn.scaleDegree == 5 and next_rn.scaleDegree == 1:

authentic += 1

# IV-I (plagal cadence)

elif current_rn.scaleDegree == 4 and next_rn.scaleDegree == 1:

plagal += 1

# V-vi (deceptive cadence)

elif current_rn.scaleDegree == 5 and next_rn.scaleDegree == 6:

deceptive += 1

except Exception: # Skip chords that cannot be labeled in this key

continue

return authentic, plagal, deceptive

# Define batch boundaries

batch_starts = list(range(0, len(chords) - 1, batch_size))

batch_ends = [start + batch_size for start in batch_starts]

# Process batches in parallel

authentic_cadences = 0

plagal_cadences = 0

deceptive_cadences = 0

with ThreadPoolExecutor() as executor:

results = list(executor.map(analyze_chord_pairs, batch_starts, batch_ends))

# Combine results

for auth, plag, dec in results:

authentic_cadences += auth

plagal_cadences += plag

deceptive_cadences += dec

return authentic_cadences, plagal_cadences, deceptive_cadences
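```python
# Sketch of the batched cadence count in _detect_cadences, run on plain
# scale-degree sequences instead of music21 RomanNumeral objects (the input
# list is hypothetical). Each batch scans pairs (i, i + 1) for i up to the
# next batch start, so pairs that straddle a batch boundary are still
# counted exactly once. Threads add little speed here because of the GIL.
from concurrent.futures import ThreadPoolExecutor

CADENCES = {(5, 1): 'authentic', (4, 1): 'plagal', (5, 6): 'deceptive'}


def count_pairs(degrees, start, end):
    counts = {'authentic': 0, 'plagal': 0, 'deceptive': 0}
    for i in range(start, min(end, len(degrees) - 1)):
        kind = CADENCES.get((degrees[i], degrees[i + 1]))
        if kind:
            counts[kind] += 1
    return counts


def count_cadences(degrees, batch_size=2):
    starts = list(range(0, len(degrees) - 1, batch_size))
    ends = [s + batch_size for s in starts]
    total = {'authentic': 0, 'plagal': 0, 'deceptive': 0}
    with ThreadPoolExecutor() as pool:
        parts = pool.map(lambda s, e: count_pairs(degrees, s, e), starts, ends)
        for part in parts:
            for kind, n in part.items():
                total[kind] += n
    return total


# I-IV-I-V-I-V-vi: one plagal (IV-I), one authentic (V-I), one deceptive (V-vi)
print(count_cadences([1, 4, 1, 5, 1, 5, 6]))
```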

def _identify_phrase_endings(self, score):

"""Identify likely phrase-ending points.

A helper that estimates where phrases end. This is a simplification:

it assumes regular 4- or 8-bar phrases and takes the last chord of

every fourth measure. Because every 8-bar boundary is also a 4-bar

boundary, a single multiple-of-4 check covers both phrase lengths.

"""

phrase_endings = []

try:

measures = score.parts[0].getElementsByClass('Measure') if score.parts else []

# Only measures 4, 8, 12, ... (0-based indices 3, 7, 11, ...) can end a

# phrase, so visit just those instead of scanning every measure

for i in range(3, len(measures), 4):

chords_in_measure = measures[i].getElementsByClass(chord.Chord)

if chords_in_measure:

phrase_endings.append(chords_in_measure[-1])

except Exception: # Scores without parts or measures yield no phrase endings

pass

return phrase_endings
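```python
# Sketch: which 0-based measure indices the phrase-ending helper visits.
# Measure i + 1 ends a 4-bar phrase when (i + 1) % 4 == 0; every 8-bar
# boundary is also a 4-bar boundary, so the two-part condition
# (i + 1) % 4 == 0 or (i + 1) % 8 == 0 selects exactly the same indices
# as a plain range with step 4.
def phrase_end_indices(n_measures, phrase_len=4):
    return list(range(phrase_len - 1, n_measures, phrase_len))


n = 20
two_part = [i for i in range(n) if (i + 1) % 4 == 0 or (i + 1) % 8 == 0]
print(phrase_end_indices(n))              # [3, 7, 11, 15, 19]
print(two_part == phrase_end_indices(n))  # True
```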

def empty_harmony_features(self):

return {

'total_chords': 0,

'unique_chords': 0,

'major_chord_ratio': 0,

'minor_chord_ratio': 0,

'diminished_chord_ratio': 0,

'augmented_chord_ratio': 0,

'avg_chord_inversion': 0,

'root_position_ratio': 0,

'progression_i_v_vi_iv_count': 0,

'progression_vi_iv_i_v_count': 0,

'progression_i_vi_iv_v_count': 0,

'progression_ii_v_i_count': 0,

'progression_i_iv_v_i_count': 0,

'progression_vi_ii_v_i_count': 0

}

def empty_dissonance_features(self):

return {

'avg_dissonance': 0,

'max_dissonance': 0,

'dissonance_variance': 0,

'tritone_frequency': 0

}

def empty_tonality_features(self):

return {

'tonic_frequency': 0,

'bass_tonic_frequency': 0,

'tonic_chord_ratio': 0,

'cadential_tonic_ratio': 0

}
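```python
# Sketch: why having two empty_modulation_features definitions in one class
# matters. A class body keeps only the last def bound to a name, so every
# caller gets the later dict. One way to keep the defaults in one place (a
# suggestion, not the notebook's code; Demo and ModulationDefaults are
# illustrative names) is a class-level template copied on each call. The
# keys below are the ten returned by the first definition above.
class Demo:
    def defaults(self):
        return {'a': 0, 'b': 0}

    def defaults(self):  # this later def silently replaces the one above
        return {'a': 0}


print(Demo().defaults())  # {'a': 0}


class ModulationDefaults:
    EMPTY = {
        'key_modulation_count': 0,
        'modulation_rate': 0.0,
        'avg_modulations_per_minute': 0.0,
        'unique_keys': 1,
        'key_stability': 1.0,
        'avg_modulation_distance': 0.0,
        'max_modulation_distance': 0.0,
        'close_modulations': 0,
        'medium_modulations': 0,
        'distant_modulations': 0,
    }

    def empty_modulation_features(self):
        return dict(self.EMPTY)  # copy so callers cannot mutate the template


print(len(ModulationDefaults().empty_modulation_features()))  # 10
```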

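# NOTE: this second definition replaces the earlier empty_modulation_features

# (Python keeps only the last def of a name in a class body), so its keys must

# match the features produced by _calculate_modulation_features.

def empty_modulation_features(self):

return {

'key_modulation_count': 0,

'modulation_rate': 0,

'avg_modulations_per_minute': 0,

'unique_keys': 1,

'key_stability': 1,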